
In the latest round of MLPerf inference benchmark scores, chip and server makers and cloud providers showed off the performance of top-of-the-range hardware on the newly introduced 6B-parameter GPT-J large language model (LLM) inference benchmark, which is designed to indicate system performance on LLMs like ChatGPT. Nvidia showed off the performance of its Grace Hopper CPU-GPU superchip, and Intel’s Habana Labs demonstrated LLM inference on its Gaudi2 accelerator hardware. Google also previewed the performance of its recently announced TPU v5e chip on LLM workloads.

Nvidia

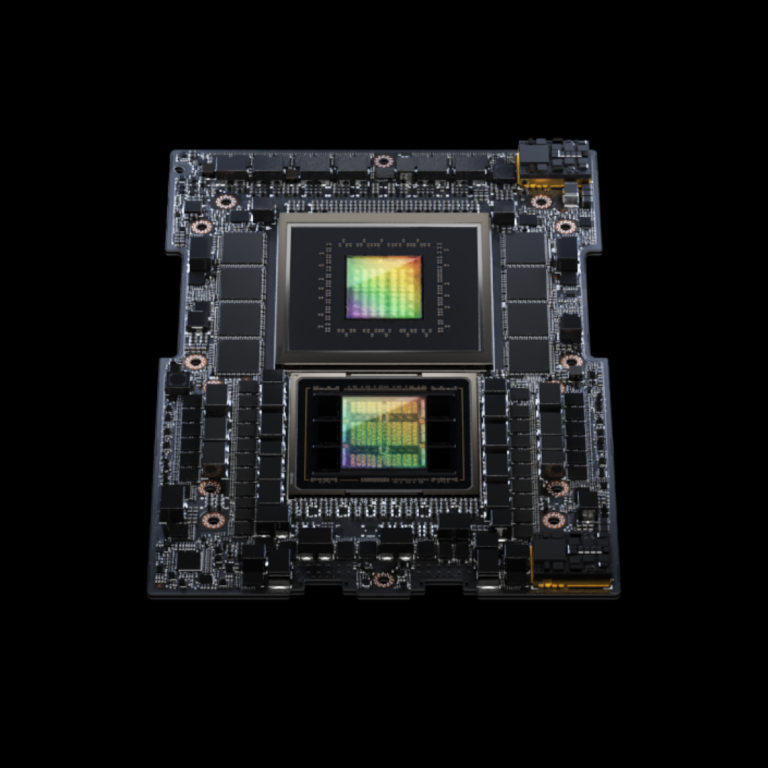

Nvidia’s CPU-GPU superchip Grace Hopper (GH200) made its debut this round. Grace Hopper features Nvidia’s 72-core Arm Neoverse V2 CPU, Grace, connected to a Hopper H100 die via Nvidia’s proprietary C2C link. Nvidia says C2C is 7x faster than PCIe, which has historically been a bottleneck. New to Grace Hopper versus existing H100 systems is a memory-coherent design, which gives the GPU direct access to 512 GB of power-efficient LPDDR5X CPU memory. This is also the first Nvidia superchip to use HBM3e, which is 50% faster than HBM3, and there is more of it (96 GB).

Grace Hopper’s inference scores improved on those of the submitted H100 with Intel Xeon system by between 2% and 17% across the workloads. The biggest advantage was on recommendation, while the smallest was on the new LLM benchmark, where Grace Hopper’s advantage was marginal (just 2% in the offline server scenario) versus the H100 and Intel Xeon system.

“As LLMs go, it’s a fairly small LLM,” Nvidia’s Dave Salvator told EE Times. “Because it doesn’t exceed the 80 GB [of HBM] we have on the H100, the performance delta was relatively modest.”

Grace Hopper can inference 10.96 queries per second in server mode or 13.34 in offline mode for the GPT-J LLM benchmark.

A new feature called “automatic power steering” dynamically allows the Hopper die to run at a faster clock frequency if the power is available.

“If the GPU gets very busy, and the CPU is relatively quiet, we can shift power budget over to the GPU to allow it to offer additional performance,” Salvator said. “By having that power headroom, we can maintain better frequency residency throughout the workload and thereby deliver more performance.”

Nvidia also debuted the L4 in this round. It is based on the company’s Ada Lovelace architecture, which is designed to accelerate both graphics and AI workloads like video analytics. This is a PCIe card with a GPU thermal design power (TDP) of 72 W, which doesn’t need a secondary power connection. On LLMs, the L4 had about one-tenth the performance of the H100, but improved to around one-fifth of the H100’s scores for vision workloads like ResNet.

Intel

Intel’s Habana Labs showed competitive results for its Gaudi2 training accelerator on the LLM benchmark versus Nvidia’s Hopper. For GPT-J at 99.0 accuracy, Gaudi2’s scores were within 10% of Grace Hopper’s for the server scenario and within 22% for the offline scenario. Gaudi2 features 96 GB of HBM2e memory, the same capacity as Grace Hopper but with the previous generation of HBM, so it is not as fast and has less bandwidth. Habana entered Gaudi2, its training chip, so it could make use of the chip’s floating-point capability for LLM inference (Habana’s Greco inference chip is integer-only).

Intel also submitted LLM inference results for its CPUs. Dual Intel Xeon Platinum 8480+ (Sapphire Rapids) CPUs can inference 2.05 queries per second in offline mode, while dual Intel CPU Max (Sapphire Rapids with 64 GB of HBM) CPUs can manage 1.3.

Google

Google submitted preview scores for its latest generation of AI accelerator silicon, the TPUv5e. The v5e is designed to improve performance per dollar compared with the TPUv4, according to Google, and it is available now in Google’s cloud in pods of up to 256 chips. Each chip offers 393 TOPS at INT8, and the pod uses Google’s proprietary inter-chip interconnect (ICI).

Four TPUv5es can inference 9.81 samples per second in offline mode, compared with 13.07 for one Nvidia H100; normalising to per-accelerator performance puts the H100 at about 5x the TPUv5e.
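The per-accelerator normalisation is simple arithmetic. Here is a minimal Python sketch using only the two offline figures quoted above. The function name `per_accelerator` is illustrative and not part of any MLPerf tooling, and the even split across chips is an assumption.

```python
def per_accelerator(samples_per_second: float, accelerators: int) -> float:
    """Divide a system-level throughput evenly across its accelerators.

    Assumes throughput scales linearly with accelerator count.
    """
    if accelerators <= 0:
        raise ValueError("accelerators must be positive")
    return samples_per_second / accelerators


# Offline GPT-J figures quoted in the article.
tpu_v5e_each = per_accelerator(9.81, 4)   # four TPUv5e chips
h100_each = per_accelerator(13.07, 1)     # one Nvidia H100

ratio = h100_each / tpu_v5e_each
print(f"TPUv5e: {tpu_v5e_each:.2f} samples/s per chip")
print(f"H100 advantage: {ratio:.1f}x per accelerator")
```

Under that linear-scaling assumption, each TPUv5e handles roughly 2.45 samples per second, which puts the H100 a little above 5x per accelerator.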

Google’s own performance-per-dollar calculations based on these figures show an improvement of 2.7x for TPUv5e versus the previous generation, which the company attributes to an optimized inference software stack based on DeepMind’s SAX and Google’s XLA compiler. Google has been working on fusing transformer operators, post-training weight quantization to INT8, and sharding strategies, among other things.
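For readers unfamiliar with post-training weight quantization, the sketch below shows one common generic form: symmetric per-tensor quantization to INT8, in plain Python. It is not Google’s implementation, which the article does not describe. The function names, the per-tensor scale, and the sample weights are all assumptions made for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127].

    Illustrative only; production stacks typically use per-channel scales
    and calibrated ranges.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from INT8 values and the scale."""
    return [v * scale for v in quantized]


# Hypothetical weights, chosen only to illustrate the round trip.
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is bounded by half the scale. Each weight is stored in one byte instead of two or four, at the cost of that small loss in precision.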