In case you missed Satya Nadella’s keynote, here’s a summary of the vast array of news, along with my views. Big impact on MSFT, AMD, AWS, and NVDA.

CEO Satya Nadella delivered a keynote full of new product announcements. In case you didn’t watch it, here’s the news in a nutshell, followed by my views.

- New hollow-fiber optical cable for low-energy, high-performance networking

- New Microsoft Maia AI Accelerator: better than AWS, but less HBM memory than NVIDIA and AMD for large AI model training and inference

- New AMD MI300 instances for Azure: A serious challenger to NVIDIA H100

- New NVIDIA H200 instances coming: more HBM memory

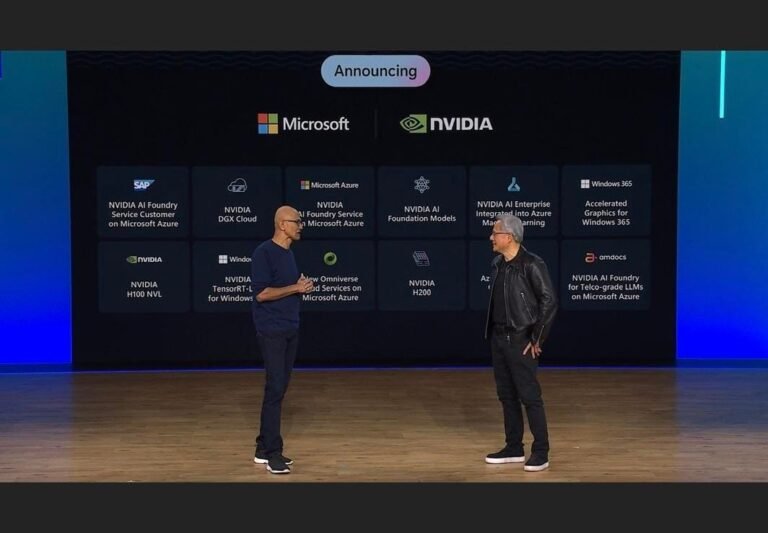

- New NVIDIA AI foundry services (and Jensen on stage!)

- New (okay, that word is getting old here 😉) Microsoft Cobalt Arm CPU for Azure: 40% faster than incumbent Ampere Altra

Details and views

Maia 100

First, Maia is not the rumored Chiplet Cloud Architecture for inference processing. But it is a good start for Microsoft, if for no other reason than to give Microsoft more pricing leverage with NVIDIA and AMD. And it has adequate performance for many internal workloads. MSFT has upped its semiconductor game considerably. While not the overall leader, it is now competitive, especially with Amazon, which seems to be far behind in performance.

The two new silicon platforms from Microsoft. (Image credit: Microsoft)

Maia is built on TSMC 5nm and has strong TOPS and FLOPS, but it was designed before the LLM explosion (it takes ~3 years to develop, fab, and test an ASIC). It’s huge, with 105B transistors (vs. 80B in H100). It cranks out 1600 TFLOPS of MXInt8 and 3200 TFLOPS of MXFP4. Its main deficit is that it has only 64 GB of HBM, though it does have a ton of SRAM. In other words, it looks like it was designed for older AI models like CNNs. Microsoft went with only 4 stacks of HBM instead of 6 like NVIDIA and 8 like AMD. The second-generation memory bandwidth is 1.6 TB/s, which beats out AWS Trainium/Inferentia at 820 GB/s but is well under NVIDIA, which has 2×3.9 TB/s.
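To put the figures quoted above side by side, here is a minimal Python sketch that computes the ratios. It uses only the numbers from this article; the variable names are my own, and the ratios say nothing about delivered performance on real workloads.

```python
# Figures as quoted in the paragraph above (not independently verified).
MAIA_BW_TBS = 1.6          # Maia 100 memory bandwidth, TB/s
AWS_BW_TBS = 0.82          # AWS Trainium/Inferentia, 820 GB/s
NVIDIA_BW_TBS = 2 * 3.9    # NVIDIA figure quoted as 2 x 3.9 TB/s

MAIA_TRANSISTORS_B = 105   # billions of transistors
H100_TRANSISTORS_B = 80

MAIA_MXINT8_TFLOPS = 1600
MAIA_MXFP4_TFLOPS = 3200

bw_vs_aws = MAIA_BW_TBS / AWS_BW_TBS
bw_vs_nvidia = MAIA_BW_TBS / NVIDIA_BW_TBS
transistors_vs_h100 = MAIA_TRANSISTORS_B / H100_TRANSISTORS_B
fp4_vs_int8 = MAIA_MXFP4_TFLOPS / MAIA_MXINT8_TFLOPS

print(f"Maia bandwidth vs AWS:    {bw_vs_aws:.2f}x")
print(f"Maia bandwidth vs NVIDIA: {bw_vs_nvidia:.2f}x")
print(f"Maia transistors vs H100: {transistors_vs_h100:.2f}x")
print(f"MXFP4 vs MXInt8 rate:     {fp4_vs_int8:.1f}x")
```

On these numbers, Maia has roughly twice the memory bandwidth of the AWS parts and about a fifth of the quoted NVIDIA figure.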

AI performance will depend on what you’re doing. For LLM training, both NVIDIA and AMD should beat it handily. Inference for larger models will also perform much better on GPUs, but for the smaller models that enterprises will be using, it should be quite adequate.
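One way to see why 64 GB of HBM favors smaller models is a back-of-the-envelope estimate of how much memory the weights alone would need. The sketch below is my own illustration, not anything Microsoft published: the model sizes and precisions are hypothetical examples, and it ignores activations, KV cache, on-chip SRAM, and multi-chip sharding, all of which matter in practice.

```python
def weight_footprint_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


HBM_GB = 64  # Maia 100 HBM capacity as quoted in this article

# Hypothetical model sizes (billions of parameters) and weight precisions.
for params in (7, 13, 70, 175):
    for bits in (16, 8, 4):
        gb = weight_footprint_gb(params, bits)
        verdict = "fits" if gb <= HBM_GB else "does not fit"
        print(f"{params:>4}B params @ {bits:>2}-bit: {gb:6.1f} GB -> {verdict} in {HBM_GB} GB")
```

Under these assumptions, a 7B or 13B model fits on a single device at any of the listed precisions, while a 70B model needs 4-bit weights and a 175B model does not fit at all without being split across chips.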

Cobalt 100

The Cobalt 100 CPU follows and perhaps replaces, for all practical purposes, the Ampere Arm CPU in Azure. It brings 128 Neoverse N2 cores on Armv9 and 12 channels of DDR5. Arm Neoverse N2 delivers 40% higher performance versus Neoverse N1. Microsoft built Cobalt 100 using Arm’s Neoverse Genesis CSS (Compute Subsystem) Platform.

No benchmarks were provided, unfortunately, but the CPU has a ton of cores and plenty of memory bandwidth to feed them. I’d expect it to outperform Ampere considerably, and that’s really the competition today.

NVIDIA Foundry Services and H200 on Azure

Microsoft and NVIDIA have been partnering for years. Unlike many other Cloud Service Providers, Azure supports all of NVIDIA’s technology without change, adopting the leading hardware, networking, and software that NVIDIA has ridden to market leadership.

The newly announced NVIDIA Foundry Services on Azure provides an end-to-end collection of NVIDIA AI Foundation Models, NVIDIA NeMo framework and tools, NVIDIA AI Enterprise, and NVIDIA DGX Cloud AI supercomputing, available for startups and enterprises to build and deploy custom AI models on Microsoft Azure. In addition, Microsoft announced the new NVIDIA H200 GPU as a service, available in 2Q 2024, and TensorRT-LLM on Windows.

The H200 was not a surprise; they already announced GH200, so taking off the Grace CPU wasn’t hard 😉. NVIDIA H200 and GH200 will dominate the market in 2024, despite all the great engineering at Microsoft, given NVIDIA’s ecosystem, CUDA, and massive adoption of H100. I stand by my projection that NVIDIA will still account for 80% market share in 2024, but time will tell, especially in 2025.

AMD MI300 Preview on Azure

As expected, Microsoft has also decided to bring the upcoming AMD Instinct MI300 GPU onto Azure, offering customers early access to what could become a significant alternative to NVIDIA GPUs. This is a key move for both Azure and AMD, as many developers want to get their hands on this new technology, which should help accelerate the needed software development on the MI300. Thanks to Microsoft, everyone will rush out to test it, and we will know much more, much sooner.

We think the MI300 may be best suited for enterprises looking to lower inference costs, but it will also perform well for training. We hope AMD will include some benchmarks in their December launch.

Conclusions

Microsoft is taking a Switzerland approach to AI acceleration, supporting its Maia 100 platform for internal use (as it does for OpenAI), and industry-leading platforms from NVIDIA and now AMD for cloud services, as it does today on the software front with Llama2 from Meta. Azure supports Intel, AMD, and Arm for CPUs, offering customers a range of choices to meet their specific performance and cost requirements.

We believe this approach clearly sets Microsoft apart from its CSP competitors.
