A group of researchers from Nvidia has developed a new technique called Tied-LoRA, which aims to improve the parameter efficiency of the Low-Rank Adaptation (LoRA) method. The technique uses weight tying and selective training to find the optimal balance between performance and the number of trainable parameters. The researchers conducted experiments on different tasks and base language models and found that there are trade-offs between efficiency and performance.

Recent advances in parameter-efficient fine-tuning methods include LoRA, which reduces trainable parameters through low-rank matrix approximations. AdaLoRA is an extension of LoRA that introduces dynamic rank adjustment and combines adapter tuning with LoRA. Another technique is VeRA, proposed by Kopiczko et al., which reduces parameters through frozen matrices and trainable scaling vectors. QLoRA uses quantized base models to achieve memory-efficient LoRA. This study applies weight tying to the low-rank weight matrices, further improving parameter efficiency.
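
To make the low-rank idea concrete, here is a minimal sketch in plain Python (no libraries, so it stays self-contained). It assumes the standard LoRA formulation, in which a frozen weight W is augmented by a scaled product (alpha / r)·B·A, with A randomly initialized and B initialized to zero so the adapter starts as a no-op. The helper names `matvec`, `lora_forward`, and `lora_param_count`, and all dimensions, are hypothetical and are not taken from the Tied-LoRA paper.

```python
import random

def matvec(M, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(W, A, B, x, alpha):
    """Frozen path W @ x plus the low-rank update (alpha / r) * B @ (A @ x)."""
    r = len(A)                          # A has shape (r, d_in)
    base = matvec(W, x)                 # pretrained weight, never updated
    update = matvec(B, matvec(A, x))    # B has shape (d_out, r)
    return [b + (alpha / r) * u for b, u in zip(base, update)]

def lora_param_count(d_in, d_out, r):
    """Trainable parameters of one adapter: A (r x d_in) plus B (d_out x r)."""
    return r * d_in + d_out * r

random.seed(0)
d_in, d_out, r = 6, 4, 2
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
B = [[0.0] * r for _ in range(d_out)]   # zero init: the adapter starts as a no-op
x = [1.0] * d_in

y = lora_forward(W, A, B, x, alpha=16)
print(y == matvec(W, x))                # True, because B is still all zeros

# Hypothetical 4096 x 4096 projection adapted with rank 8.
print(4096 * 4096, lora_param_count(4096, 4096, 8))  # 16777216 65536
```

The counts show why LoRA is cheap: a rank-8 adapter trains roughly 0.4% of the entries of the full matrix. Standard LoRA still keeps a separate A and B for every adapted layer, which is the redundancy that weight tying targets.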

To address the computational expense of fine-tuning LLMs for downstream tasks, the researchers propose Tied-LoRA, a novel approach that combines weight tying and selective training to enhance the parameter efficiency of LoRA. Through systematic experiments on various tasks and base language models, they explore different combinations of parameter training/freezing and weight tying. The researchers identify a specific Tied-LoRA configuration that achieves comparable performance while using only 13% of the parameters of the standard LoRA method.

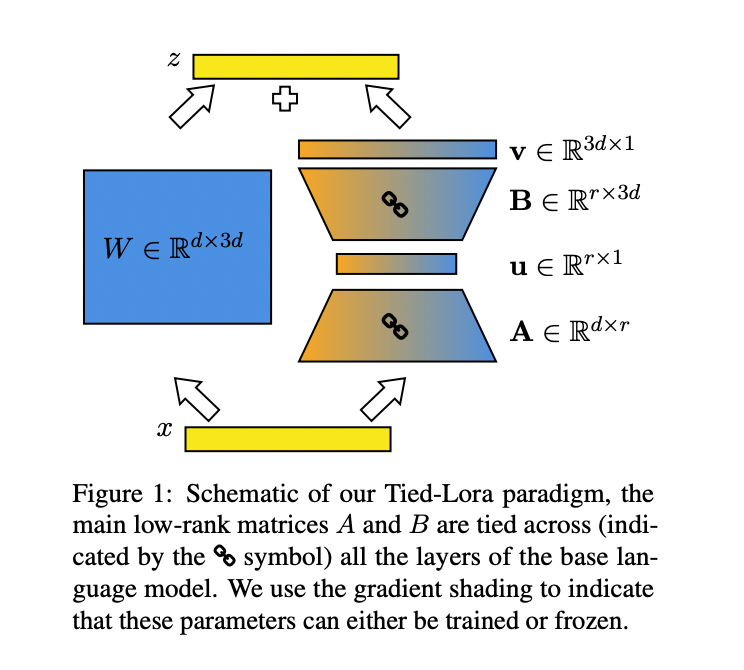

Tied-LoRA enhances the parameter efficiency of LoRA by combining weight tying and selective training. It applies weight tying to the low-rank matrices in LoRA, sharing the same weights across the layers of the base language model and thereby reducing the number of trainable parameters. It explores various combinations of parameter training/freezing and weight tying to strike an optimal balance between performance and trainable parameters. The proposed Tied-LoRA configurations are evaluated on various tasks, demonstrating efficiency across data settings, including translation and mathematical reasoning.
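
The weight-tying idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume a single low-rank pair A (r x d) and B (d x r) shared by every layer, plus hypothetical per-layer scaling vectors u (length r) and v (length d) applied around them, giving an update of the form v ⊙ (B (u ⊙ (A x))) in the spirit of VeRA's scaling vectors. The function `tied_update`, the dimensions, and the initial values are illustrative choices.

```python
import random

def matvec(M, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def tied_update(A, B, u, v, x):
    """Low-rank update v * (B @ (u * (A @ x))) for one layer.

    A and B are shared by all layers (weight tying); u and v are this
    layer's own scaling vectors (an assumption made for illustration).
    """
    h = [ui * hi for ui, hi in zip(u, matvec(A, x))]      # scale the rank-r bottleneck
    return [vi * oi for vi, oi in zip(v, matvec(B, h))]   # scale the d-dim output

random.seed(0)
d, r, n_layers = 8, 4, 3
# A single A and a single B for the whole model.
A = [[random.gauss(0, 0.1) for _ in range(d)] for _ in range(r)]
B = [[random.gauss(0, 0.1) for _ in range(r)] for _ in range(d)]
# Hypothetical per-layer scaling vectors, initialized to ones here.
us = [[1.0] * r for _ in range(n_layers)]
vs = [[1.0] * d for _ in range(n_layers)]
vs[1] = [0.5] * d                       # give layer 1 a different output scale

x = [1.0] * d                           # same input to every layer, for comparison only
updates = [tied_update(A, B, us[layer], vs[layer], x) for layer in range(n_layers)]
print(updates[0] == updates[2])         # True: same shared matrices, same scales
```

Because A and B are stored once, adding a layer only adds the r + d entries of that layer's u and v rather than a fresh r·d + d·r adapter. In a real model each layer would of course receive a different input x.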

In experiments across various tasks and two base language models, the different Tied-LoRA configurations showed trade-offs between efficiency and performance. One specific configuration, vBuA, outperformed the others and achieved performance comparable to standard LoRA. vBuA was identified as the optimal option, maintaining performance while reducing trainable parameters by 87%. Evaluations on tasks such as extractive question answering, summarization, and mathematical reasoning showed that Tied-LoRA significantly improves parameter efficiency while preserving competitive performance.
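
The configuration name vBuA and the 13% / 87% figures can be related through simple parameter counting. The sketch below rests on assumptions that should be checked against the paper: uppercase letters in a configuration name mark trainable components and lowercase letters mark frozen ones, A and B are tied (stored once for the whole model), and u and v exist once per layer. The dimensions (d = 4096, r = 8, 32 layers) are hypothetical and are not the paper's setup, so the ratios printed here will not reproduce the reported 13%; the function names are illustrative too.

```python
def tied_lora_trainable(config, d, r, n_layers):
    """Trainable parameters for a Tied-LoRA-style config string such as "vBuA".

    Assumed notation: uppercase = trained, lowercase = frozen.
    A (r x d) and B (d x r) are tied, so the model stores one copy of each;
    u (length r) and v (length d) are assumed to exist once per layer.
    """
    sizes = {
        "A": r * d,
        "B": d * r,
        "U": n_layers * r,
        "V": n_layers * d,
    }
    return sum(sizes[c] for c in config if c.isupper())

def lora_trainable(d, r, n_layers):
    """Standard LoRA adapting one d x d weight per layer: untied A and B everywhere."""
    return n_layers * (r * d + d * r)

def reduction(fraction_kept):
    """Share of LoRA's trainable parameters removed, e.g. 0.13 kept -> 0.87 removed."""
    return 1.0 - fraction_kept

d, r, n_layers = 4096, 8, 32            # hypothetical model dimensions
baseline = lora_trainable(d, r, n_layers)
for config in ["vBuA", "VBUA"]:
    count = tied_lora_trainable(config, d, r, n_layers)
    print(f"{config}: {count} trainable, {count / baseline:.1%} of LoRA")

print(f"{reduction(0.13):.0%}")         # 87%
```

Under these assumptions, the vBuA count does not grow with depth at all, since only the tied A and B are trained. That illustrates where savings of this size can come from, although the exact percentage depends on the ranks and layers used in the actual experiments.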

Across these experiments, the researchers found that Tied-LoRA improves the parameter efficiency of the LoRA method through weight tying and selective training. The results suggest that Tied-LoRA can substitute for LoRA on tasks such as commonsense NLI, extractive QA, and summarization. Moreover, it offers improved parameter efficiency without compromising performance, using only 13% of the parameters of standard LoRA. Nevertheless, discussing its limitations and comparing it with other parameter-efficiency methods is important for identifying areas for future exploration.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.

↗ Step by Step Tutorial on ‘Easy methods to Construct LLM Apps that may See Hear Communicate’