NVIDIA looks set to gobble up the bulk of the HBM3e and next-gen HBM4 memory supply for its current and next-gen AI GPUs. A new TrendForce report states that NVIDIA will absorb most HBM3, HBM3e, and HBM4 memory over the next few years.


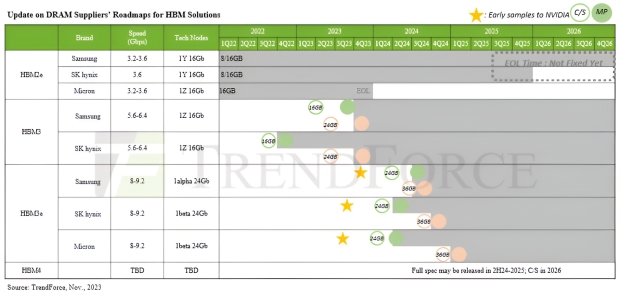

TrendForce's latest research into the HBM market sees NVIDIA planning to diversify its HBM suppliers for "more robust and efficient supply chain management". TrendForce expects NVIDIA to buy most of its HBM memory from Samsung because Samsung will be ready first, while SK hynix and Micron will have their new HBM memory ready in the second half of 2024.

Samsung's new HBM3 (24GB) is expected to complete verification with NVIDIA in the coming weeks. Samsung isn't the only supplier, though: on the HBM3e side, Micron's 8-Hi stacks (24GB) will reach NVIDIA by the end of July 2024, SK hynix will have its HBM3e memory with NVIDIA in mid-August 2024, and Samsung will be the last to deliver HBM3e memory to NVIDIA, in October 2024.

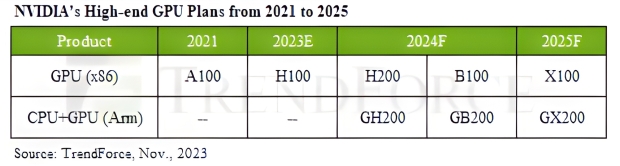

NVIDIA's current-gen H100 AI GPU uses HBM3 memory, while the just-announced H200 AI GPU and the upcoming B100 AI GPU in 2024 will use HBM3e memory. NVIDIA has also teased its next-next-gen X100 AI GPU on datacenter roadmaps for 2025. NVIDIA is tapping Micron's new HBM3e memory for its beefed-up H200 AI GPU, which carries up to 141GB of HBM3e memory per GPU.

The AI boom has driven demand from both AMD and NVIDIA for high-speed HBM3, HBM3e, and future HBM4 memory for their AI GPUs, with AMD's upcoming Instinct MI300X series of AI accelerators featuring up to 192GB of HBM3 memory. TrendForce reiterates that HBM4 memory will launch in 2026 and will be the first generation to use a 12nm process for its bottommost logic die (the base die), which will be supplied by foundries.

We can expect HBM4 12-Hi stacks to launch in 2026, with HBM4 16-Hi stacks following a year later in 2027.