In 2021, Intel famously declared its goal to reach zettascale supercomputing by 2027, or scaling today's Exascale computers by 1,000 times.

Fast forward to 2023: attendees at the Supercomputing 2023 conference, which is being held in Denver, said it is a challenge to scale up performance even within the Exaflops range.

The move to CPU-GPU architecture has helped scale performance, but other problems, such as architectural limitations and sustainability issues, are making further scaling difficult, officials at Top500 said.

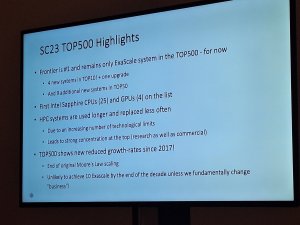

In fact, at the current rate, supercomputers may not reach 10 Exaflops of performance by 2030. Also, performance growth has fallen off over the last couple of years despite new Exascale systems entering the Top500 list.
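To put those targets in perspective, here is a rough back-of-the-envelope sketch of the compound annual growth the top system would need. It assumes Frontier's 1.1 Exaflops (the list leader cited later in this article) as the 2023 baseline; the function and variable names are illustrative and do not come from Top500.

```python
EXA = 1e18    # floating-point operations per second in one Exaflop
ZETTA = 1e21  # one Zettaflop, i.e. 1,000 Exaflops

def required_annual_growth(start_flops, target_flops, years):
    """Compound annual growth factor needed to go from start to target."""
    if years <= 0:
        raise ValueError("years must be positive")
    return (target_flops / start_flops) ** (1.0 / years)

# Assumption: the 2023 baseline is the 1.1 Exaflops leader named in this article.
baseline = 1.1 * EXA

growth_10ef = required_annual_growth(baseline, 10 * EXA, 2030 - 2023)
growth_zetta = required_annual_growth(baseline, ZETTA, 2027 - 2023)

print(f"10 Exaflops by 2030 needs about {growth_10ef - 1:.0%} growth per year")
print(f"Zettascale by 2027 needs about {growth_zetta:.1f}x per year")
```

Under these assumptions, reaching 10 Exaflops by 2030 would take roughly 37% growth per year, while a zettascale system by 2027 would require the top machine to get about 5.5 times faster every year.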

“Unless we change how we approach computing, our growth in the future will be significantly smaller than they've been in the past,” said Erich Strohmaier, cofounder of Top500, during a press conference.

The end of two fundamental corollaries, Dennard Scaling and Moore's Law, has created challenges in scaling performance.

“The end of Moore's Law is coming, there's no question about that,” Strohmaier said.

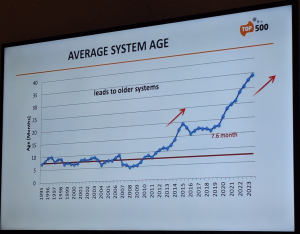

The number of systems submitted to Top500 has progressively declined since 2017. The average performance of systems has also been declining over the last couple of years.

The slowdown is also related to the inability to grow system sizes because of architectural limitations and sustainability issues.

“Our data centers can't grow much larger than they are. So we can't increase the numbers of … CPU sockets,” Strohmaier said.

Optical I/O has been identified as a technology to help reach zettascale. However, a U.S. Department of Energy (DoE) official said that optical I/O was not on their roadmap because of the cost and the energy required to operate optical I/O to connect circuits over short distances at the motherboard level. By comparison, copper is cheap and plentiful.

Average HPC systems also have a longer shelf life. The average age of a Top500 system was about 15 months in 2018-2019 and doubled to 30 months in 2023.

The top seven systems on the November Top500 list have as much performance as the remaining 493. The upcoming systems will create an even bigger divide, with an even larger share of performance coming from the top 10 systems.

At the same time, some exciting new Exascale machines will be making their way to the Top500 list. There may be many lead changes as multiple supercomputers come online and are optimized to perform faster.

There are two new systems, Aurora this year and El Capitan next year, that could take the top Top500 positions in the coming years. The systems will scale to 2 Exaflops.

There was no change in the leader of the Top500 supercomputing list issued this week, with Frontier at Oak Ridge National Laboratory retaining its top spot. The system delivered 1.1 Exaflops of performance and remained the only Exascale system on the list.

“I'd say that the machine is completely stable right now, and it's performing exceptionally well,” said Lori Diachin, project director for the Exascale Computing Project at the U.S. Department of Energy.

But Frontier could soon be replaced by the second-fastest system, Aurora, installed at Argonne National Laboratory. It delivered a performance of 585.34 petaflops and has only been partially benchmarked. The system has Intel 4th Gen Xeon server chips called Sapphire Rapids CPUs and Data Center GPU Max chips called Ponte Vecchio.

Argonne submitted benchmarks for half the system size, and its performance will only go up when fully benchmarked, Strohmaier said.

“It's questionable if Frontier will stay the number one system for much longer,” Strohmaier said.
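Simple arithmetic illustrates why. The sketch below naively extrapolates Aurora's half-system result to the full machine. Linear scaling is an assumption (real benchmark runs rarely scale perfectly), and `scaling_efficiency` is a hypothetical knob for this example, not a Top500 metric.

```python
FRONTIER_PF = 1100.0     # 1.1 Exaflops, as reported in this article
AURORA_HALF_PF = 585.34  # Aurora's partial result, as reported in this article

def projected_petaflops(measured_pf, fraction_benchmarked, scaling_efficiency=1.0):
    """Extrapolate a partial benchmark result to the full system.

    scaling_efficiency is a hypothetical factor: 1.0 means perfectly linear scaling.
    """
    if not 0.0 < fraction_benchmarked <= 1.0:
        raise ValueError("fraction_benchmarked must be in (0, 1]")
    return measured_pf / fraction_benchmarked * scaling_efficiency

# Assumption: "half the system size" means exactly 50% of the machine.
ideal = projected_petaflops(AURORA_HALF_PF, 0.5)
break_even = FRONTIER_PF / ideal

print(f"Ideal full-system projection: {ideal:.2f} petaflops")
print(f"Efficiency needed to match Frontier: {break_even:.1%}")
```

With perfect scaling, the full machine would land near 1.17 Exaflops, just ahead of Frontier. It would need to retain about 94% of that ideal figure to draw level, which is why the outcome is an open question rather than a certainty.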

Diachin's team has had limited access to the system since July and is seeing great performance.

“We're really looking forward to getting full access to that system, hopefully later this month,” Diachin said.

The third Exascale supercomputer, El Capitan, will be deployed in mid to late 2024 at the Lawrence Livermore National Laboratory.

The system will likely take the top spot on Top500 when the benchmark is released, but it is not clear when that will happen.

“There'll be a brief early science period for that machine before it's turned over to classified use for stockpile stewardship for the [NNSA],” Diachin said.

Additionally, many Top500-class Exaflop systems may be hiding in plain sight, particularly in the cloud facilities of vendors who haven't bothered to submit results. Google's A3 supercomputer can accommodate up to 26,000 Nvidia H100 GPUs but has not submitted any results.

But one submission, Microsoft's Azure AI supercomputer called Eagle, unexpectedly landed in the third spot of this year's Top500, and Nvidia's bare-metal Eos was in the ninth spot.

A past contributor, China, has gone off the map and isn't submitting results to Top500. One submission for the Gordon Bell awards is a Chinese Exascale system, but there have been no submissions of the system's performance to the Top500.

Beyond raw horsepower, DoE's Diachin is also trying new ways to scale performance within the current hardware limitations.

One such idea is using mixed precision and a wider implementation of accelerated computing. Also, DoE is looking at incorporating AI into large multiphysics models and enveloping that into classical computing to reach faster results.
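The article does not say which mixed-precision methods DoE is pursuing. One well-known example of the general idea is iterative refinement: do the expensive linear solve in fast, low precision, then correct the answer using residuals computed in high precision. The following is a minimal NumPy sketch under that assumption; the function name and the test matrix are illustrative only.

```python
import numpy as np

def solve_mixed_precision(A, b, refinements=5):
    """Solve Ax = b using float32 solves plus float64 residual corrections."""
    A32 = A.astype(np.float32)
    # Cheap low-precision initial solve.
    x = np.linalg.solve(A32, b.astype(np.float32)).astype(np.float64)
    for _ in range(refinements):
        residual = b - A @ x  # residual computed in float64
        # A production code would factor A32 once and reuse the factors;
        # re-solving here keeps the sketch short.
        correction = np.linalg.solve(A32, residual.astype(np.float32))
        x += correction.astype(np.float64)
    return x

rng = np.random.default_rng(0)
n = 50
A = rng.standard_normal((n, n)) + n * np.eye(n)  # well-conditioned test matrix
x_true = rng.standard_normal(n)
b = A @ x_true

x_single = np.linalg.solve(A.astype(np.float32), b.astype(np.float32)).astype(np.float64)
x_mixed = solve_mixed_precision(A, b)

print("float32-only error:   ", np.max(np.abs(x_single - x_true)))
print("mixed-precision error:", np.max(np.abs(x_mixed - x_true)))
```

The appeal for power-constrained systems is that most of the arithmetic runs in the cheaper format, while the refined result approaches double-precision accuracy for well-conditioned problems like this one.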

“From our perspective, one of the things we're really looking toward is some of these algorithmic improvements and broader incorporation of those kinds of technologies to accelerate applications while keeping the power footprint manageable,” Diachin said.

Many labs are also looking at their old code written in languages like Fortran 77 and rewriting and recompiling it for accelerated computing environments.

This approach “will help future-proof many of these codes by extracting layers that are specific to different kinds of hardware and allowing them to be more performance portable with less work,” Diachin said.

Hardware and algorithmic improvements delivered performance gains mostly in the 200x to 300x range and “as much as even several thousand times improvement,” Diachin said.

The labs often rely on E4S, the Extreme-scale Scientific Software Stack, which comprises debugging, runtime, math, visualization, and compression tools. It has more than 115 packages and is being pushed out to academia, scientific organizations, and other U.S. government agencies.
