Price, ample performance, and availability have become Intel’s mantra in the AI chip supply wars. Intel’s Gaudi2 accelerator and 4th Gen Xeon CPU both posted strong showings in the MLPerf Training 3.1 benchmark results issued this week. Gaudi2, for example, delivered on promised gains from enabling FP8 on some workloads. Nvidia’s H100 was still the top performer.

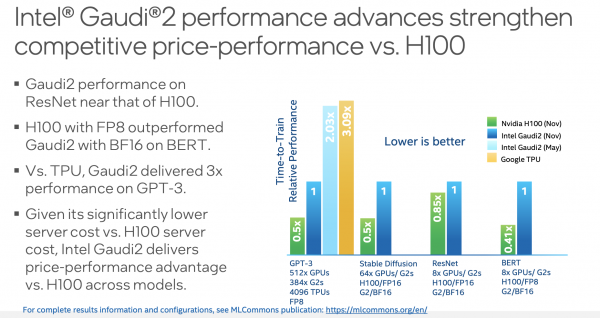

In a briefing with HPCwire, Eitan Medina, COO of Habana Labs (an Intel company), said, “If you recall, in the last submission back in May, we said that we would enable FP8 by the second half of this year, and to our customers, we said they should expect a 90% improvement in time-to-train. With these submission results, we’re very proud to have achieved a 103% improvement in cutting down the time to train (GPT-3) on a cluster of 384 Gaudi2.”
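A literal 103% cut in time-to-train is impossible, so Intel’s figure is best read as a throughput gain. That reading is consistent with the “2x performance leap” headline of Intel’s press release. The short Python sketch below makes this interpretation explicit. It assumes that “X% improvement” means speedup = 1 + X/100, and the helper names are hypothetical:

```python
def speedup_from_improvement(pct: float) -> float:
    """Convert an 'X% improvement' into a speedup factor.

    Assumption (not stated by Intel): 'X% improvement' means training
    throughput rose by X%, so speedup = 1 + X/100.
    """
    return 1.0 + pct / 100.0


def remaining_time_fraction(speedup: float) -> float:
    """Fraction of the original time-to-train left after a given speedup."""
    return 1.0 / speedup


if __name__ == "__main__":
    # The promised (90%) and achieved (103%) figures quoted by Medina.
    for label, pct in (("promised", 90.0), ("achieved", 103.0)):
        s = speedup_from_improvement(pct)
        frac = remaining_time_fraction(s)
        print(f"{label}: {s:.2f}x speedup, time-to-train = {frac:.1%} of before")
```

Under this reading, the promised 90% gain corresponds to a 1.9x speedup and the achieved 103% to roughly 2.03x. In other words, GPT-3 training on the same 384-accelerator cluster takes a little under half as long as before.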

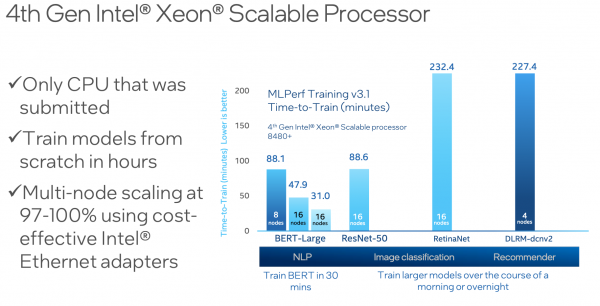

Medina added, “We want to shine light on two main points: With the GPT-3 training, only three accelerators were used for training this model at scale. And of those three, only two, basically Gaudi2 and Nvidia’s, are merchant silicon. The second point is, once again, (4th Gen) Xeon is the only CPU that was submitted for MLPerf this time around. Overall, we feel this shows Intel’s commitment to MLPerf and the actual scalability and the breadth of Intel solutions covering AI training, inference, fine-tuning, basically across the entire spectrum.” Google’s captive TPU was the third accelerator used for GPT-3 training.

To a considerable extent, the marketing message repeats the position Intel outlined in September. At that time, Gaudi2 and 4th Gen Xeon both performed well on the MLPerf Inference 3.1 benchmark suite but again fell short of Nvidia’s H100. Recall that Intel acquired Habana Labs in 2019. Gaudi2 was launched in May 2022 as the first Intel/Habana product. Medina said little about Gaudi3, which is expected sometime in 2024.

Intel positions Gaudi2 and 4th Gen Xeon as a kind of one-two punch for AI workloads. For smaller-scale, intermittent training needs and model fine-tuning, 4th Gen Xeon fills the bill nicely and economically, argues Intel. The 4th Gen Xeon’s matrix-multiply and mixed-precision capabilities are ample for that work. For large-scale training, where dedicated accelerators are needed, Gaudi2 is a solid offering, says Intel, although it performs at roughly 0.5x of Nvidia’s H100 on many jobs.

Nvidia submitted results using very large systems and was clearly the dominant GPU in MLPerf Training 3.1. Intel provided normalized performance data to make its case.

“As you can see, Gaudi2 delivered around double the training time of H100 on GPT-3, and you can see the other results comparing Stable Diffusion, BERT and ResNet,” said Medina. Note that Gaudi2 used BF16 on the other tests, and the data shown by Intel is for H100 in FP16 mode. Medina said, “This positions Gaudi as an excellent solution that can be competitive on price-performance versus H100. If you check the Gaudi2 server price with OEMs that offer Gaudi2 systems, you will find that the price of Gaudi2 solutions for an enterprise customer, let’s say a by-eight server, is a small fraction of the H100.”
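Intel’s price-performance argument comes down to two ratios. The sketch below makes two assumptions. First, relative performance is the reference system’s time-to-train divided by the candidate’s, so “around double the training time” means roughly 0.5x. Second, performance per dollar compares that ratio with the ratio of system prices. The function names are hypothetical. The price ratios in the usage lines are arbitrary illustrations, not quoted prices from Intel, Nvidia, or any OEM:

```python
def relative_performance(t_reference: float, t_candidate: float) -> float:
    """Candidate performance relative to the reference (1.0 = parity).

    Based on time-to-train: taking twice as long yields 0.5.
    """
    return t_reference / t_candidate


def perf_per_dollar_ratio(rel_perf: float, price_ratio: float) -> float:
    """Candidate perf-per-dollar divided by reference perf-per-dollar.

    price_ratio = candidate system price / reference system price.
    A result above 1.0 means the candidate is the better price-performance buy.
    """
    return rel_perf / price_ratio


if __name__ == "__main__":
    # Normalized times: reference (H100) = 1.0, candidate (Gaudi2) ~ 2.0.
    rel = relative_performance(1.0, 2.0)
    # Hypothetical price ratios, chosen only to show the break-even point.
    for price_ratio in (0.25, 0.5, 0.75):
        ratio = perf_per_dollar_ratio(rel, price_ratio)
        print(f"price ratio {price_ratio:.2f}: perf-per-dollar ratio {ratio:.2f}")
```

The break-even point falls where the price ratio equals the relative performance. At roughly 0.5x of H100 performance, a Gaudi2 system would need to cost less than about half as much as a comparable H100 system to come out ahead. The model deliberately ignores power, software maturity, and availability, all of which also weigh on real purchasing decisions.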

Addison Snell (CEO) and Dan Olds (CRO) made some interesting comments last week in the annual Intersect360 Research pre-SC23 HPC-AI market update. Gaudi didn’t show up well in the Intersect360 Research annual user survey on questions about planned CPU/accelerator use. Olds wondered if it was a supply problem.

“It’s not a supply problem,” said Medina, “but I agree with you that marketing has not been as effective.” That is changing, said Medina. “We’re working hard, inside Intel and of course with our partners, to improve that situation. The results that we’ve brought forward are very, very compelling. So, we do see a significant shift in sentiment and actually very strong demand signals for Gaudi, and there have been, actually, very public announcements of design wins for Gaudi, on large installations.”

Here are a few of the recent Gaudi2 and Xeon design wins the company has disclosed:

- “Intel announced a large AI supercomputer will be built entirely on Intel Xeon processors and 4,000 Intel Gaudi2 AI hardware accelerators, with Stability AI as the anchor customer.

- “Dell Technologies and Intel are collaborating to offer AI solutions to meet customers wherever they are on their AI journey. PowerEdge systems with Xeon and Gaudi will support AI workloads ranging from large-scale training to base-level inferencing.

- “Alibaba Cloud has reported 4th Gen Xeon as a viable solution for real-time large language model (LLM) inference in its model-serving platform DashScope, with 4th Gen Xeon achieving a 3x acceleration in response time thanks to its built-in Intel Advanced Matrix Extensions (Intel AMX) accelerators and other software optimizations.”

Intel also showed data for its 4th Gen Xeon submission, again emphasizing that it is the only CPU performing training in MLPerf.

Not much was said about Gaudi3 beyond the fact that it is in development. Intel is expected to say much more about its chip roadmap, including accelerators, during SC, so stay tuned. For now, Gaudi2 uses a development suite that is separate from the Intel oneAPI platform used for other Intel processors.

Currently, Intel offers Gaudi2 as a mezzanine card. “We’ve chosen to use a standard OCP form factor. It’s fairly similar in size (to others) and goes into servers that are typically populated with eight accelerators. This is the server form factor that is ideal for AI, specifically,” said Ronak Shah, AI Product Director, Xeon, Intel.

Link to Intel press release, https://www.hpcwire.com/off-the-wire/intel-gaudi-ai-accelerator-gains-2x-performance-leap-on-gpt-3-with-fp8-software/

Link to MLPerf Training 3.1 press release, https://www.hpcwire.com/off-the-wire/new-mlperf-training-and-hpc-benchmark-results-showcase-49x-performance-gains-in-5-years/