The Gigabyte R183-Z95 is a very cool 1U server. Inside there are two AMD EPYC 9004 series processors, but that’s only the start. There’s room for four different types of storage to be plugged directly into the system without using any HBAs or RAID controllers. In this review, we’re going to see what’s different, especially with the E1.S EDSFF SSD bays. Let us get to it!

Gigabyte R183-Z95 Exterior Overview

The system itself is a 1U server that’s 815mm or just over 32 inches deep. From the front of the server, you can see there is something different about this one.

The left side, but not the left rack ear, has the USB 3 Type-A port as well as the status LEDs and power buttons. We don’t see this often these days because front panel space is usually at a premium.

Next to that is an array of eight 2.5″ drive bays. Usually, the peak density is twelve 2.5″ front drive bays. The downside to that configuration is that cooling becomes a challenge for the entire system and the drive trays are usually not as sturdy. Here, Gigabyte is giving us plenty of storage, while using larger trays and more space for better airflow.

The trick to getting 14x front panel drives is using EDSFF, or more specifically six E1.S drive bays.

You may have seen our EDSFF overview.

That was done in the early days of EDSFF. Now, we have many drive manufacturers and systems that take various formats. Since we had two systems where we needed the drives, when I was in California for the recent Inside the Secret Data Center at the Heart of the San Francisco 49ers Levi’s Stadium piece, I stopped in at Kioxia and borrowed a few drives to use here.

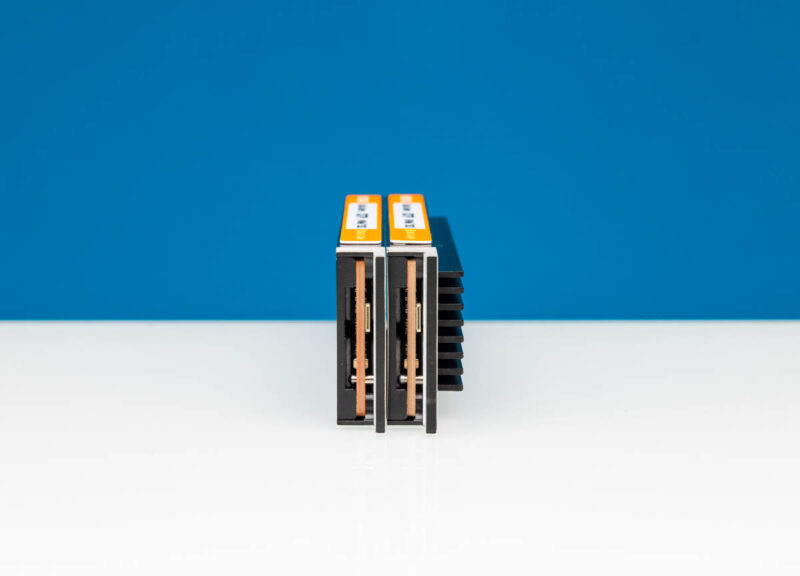

We have both Kioxia XD6 and Kioxia XD7P drives here.

Something that is fun with EDSFF is that the server makers, or allegedly some well-known OEMs, decided that instead of making the latching mechanism a part of the spec, they wanted their own drive carriers as they have with 2.5″ drives. So the server needs to come with the little latching carrier that fits that server, and that’s what we have here. It’s secured by two screws and is very small. This is one of the silliest things in servers today.

Gigabyte provides blanks, so it’s fairly easy to install the latches onto drives.

Something else that matters is the size. This server specifically takes E1.S 9.5mm drives (and smaller). If you have a larger 15mm E1.S drive, with a heatsink for thermal management, then you are at minimum not going to be able to use all six drive bays here.

Still, using 9.5mm drives, we can add six E1.S drives plus the eight 2.5″ NVMe SSDs for a total of fourteen on the front of the chassis.
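For readers who like to see the tally written out, here is a minimal sketch of the front-panel math. The names (`FRONT_BAYS`, `fits_e1s_bay`, `max_front_drives`) are hypothetical and only for illustration. The only facts taken from this review are the eight 2.5″ bays, the six E1.S bays, and the 9.5mm thickness limit. It deliberately does not guess how many bays a thicker drive would block, since we only know it is fewer than six.

```python
from typing import Optional

# Hypothetical model of the front panel described in this review.
E1S_MAX_THICKNESS_MM = 9.5  # the E1.S bays take 9.5mm drives (and smaller)

FRONT_BAYS = {
    "2.5in NVMe": 8,
    "E1.S": 6,
}


def fits_e1s_bay(thickness_mm: float) -> bool:
    """Return True if an E1.S drive of this thickness fits in a single bay."""
    return thickness_mm <= E1S_MAX_THICKNESS_MM


def max_front_drives(e1s_thickness_mm: float) -> Optional[int]:
    """Total front drives when every E1.S bay holds a drive of this thickness.

    Returns None for thicker drives: the review only establishes that fewer
    than six E1.S bays would be usable, not the exact number.
    """
    if not fits_e1s_bay(e1s_thickness_mm):
        return None
    return sum(FRONT_BAYS.values())


print(max_front_drives(9.5))   # 14
print(max_front_drives(15.0))  # None
```

With 9.5mm drives the function returns the full fourteen. With 15mm drives it returns `None` rather than a made-up count.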

Here is a quick look at the XD7P in 9.5mm and 15mm so you can tell the difference easily.

Moving to the rear of the server, we have a fairly standard layout for folks that want to run A+B sides of racks for both power and networking.

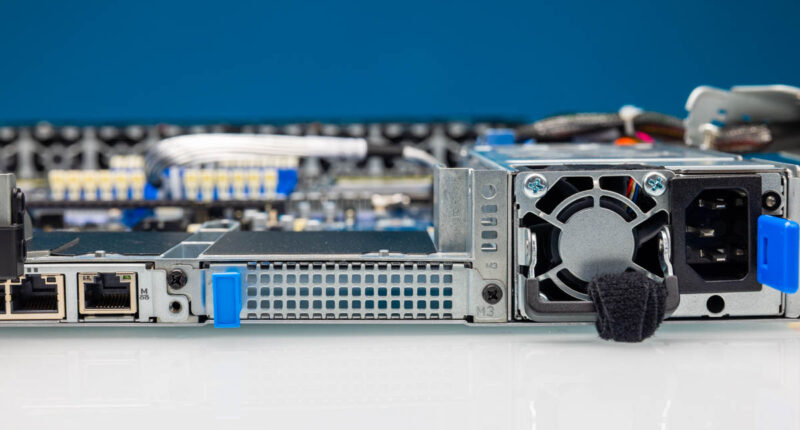

The power supplies at either side are LiteOn 1.6kW units. Having power supplies at either side allows a PDU to be connected on either side for A+B redundant power.

Next are the risers on either side of the rear. These are PCIe Gen5 x16 full-height risers and can be removed with a single thumbscrew. They are easy to work on since they are cabled.

Below these risers, we have OCP NIC 3.0 slots.

These are for A+B networking on each side of the chassis. Gigabyte is using the SFF with pull tab design, which is the easiest to service.

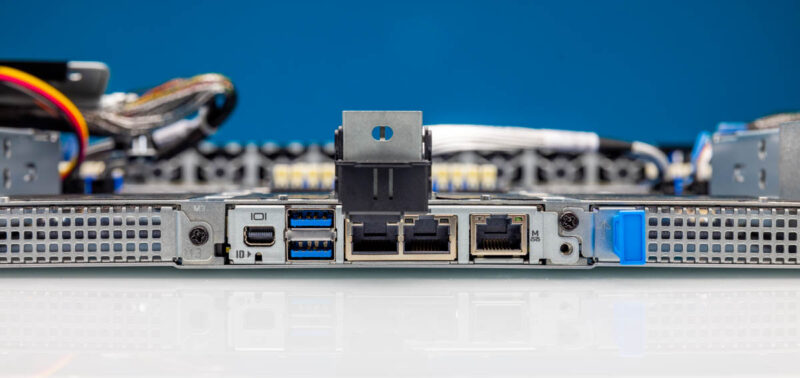

In the rear, we get something different. We get a mini DisplayPort video output instead of VGA. Then we get two standard USB 3 Type-A ports. Finally, we get three NICs, two of which are Intel i350-AM2 ports. That is a higher quality 1GbE NIC than the i210, which is a nice touch.

We also get the out-of-band management port. The NIC and the ASPEED BMC are both on an OCP NIC 3.0 form factor card in the middle slot.

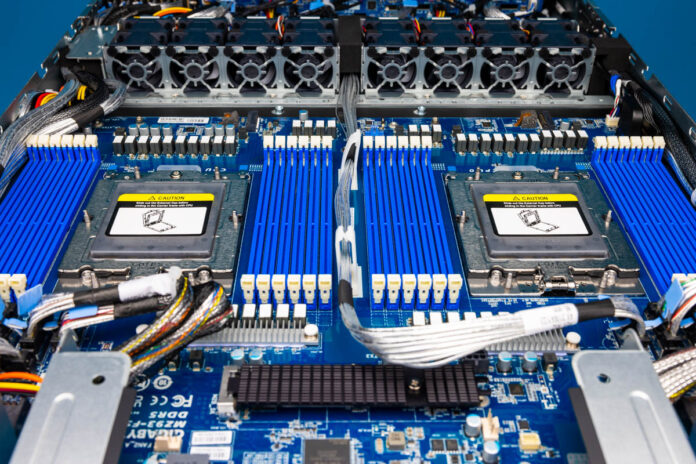

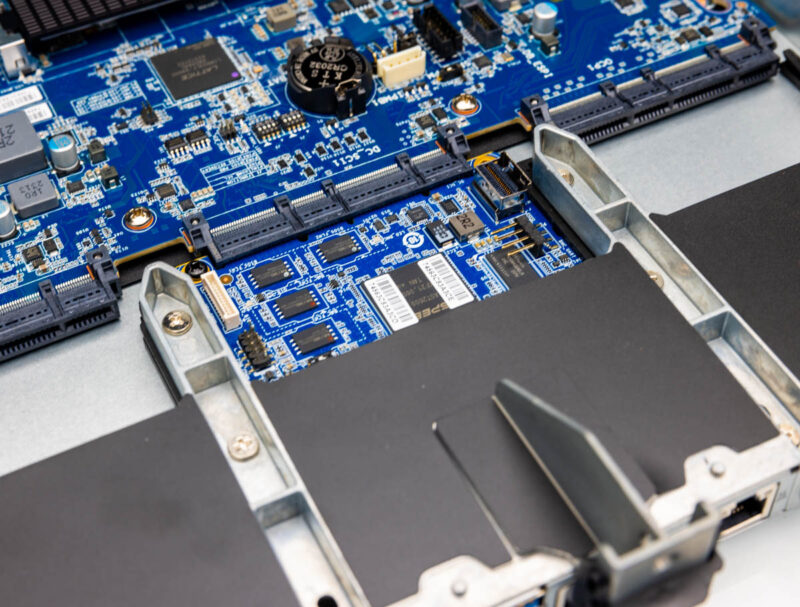

Next, let us get inside the system to see how it works.