As generative AI and large language models (LLMs) continue to drive innovations, compute requirements for training and inference have grown at an astonishing pace.

To meet that need, Google Cloud today announced the general availability of its new A3 instances, powered by NVIDIA H100 Tensor Core GPUs. These GPUs bring unprecedented performance to all kinds of AI applications with their Transformer Engine, which is purpose-built to accelerate LLMs.

Availability of the A3 instances comes on the heels of NVIDIA being named Google Cloud’s Generative AI Partner of the Year, an award that recognizes the companies’ deep and ongoing collaboration to accelerate generative AI on Google Cloud.

The joint effort takes multiple forms, from infrastructure design to extensive software enablement, to make it easier to build and deploy AI applications on the Google Cloud platform.

At the Google Cloud Next conference, NVIDIA founder and CEO Jensen Huang joined Google Cloud CEO Thomas Kurian for the event keynote to celebrate the general availability of NVIDIA H100 GPU-powered A3 instances and to discuss how Google is using NVIDIA H100 and A100 GPUs for internal research and inference in DeepMind and other divisions.

During the discussion, Huang pointed to the deeper levels of collaboration that enabled NVIDIA GPU acceleration for the PaxML framework for creating massive LLMs. This JAX-based machine learning framework is purpose-built to train large-scale models, allowing advanced and fully configurable experimentation and parallelization.

PaxML has been used by Google to build internal models, including at DeepMind and in research projects, and will use NVIDIA GPUs. The companies also announced that PaxML is available immediately on the NVIDIA NGC container registry.

Generative AI Startups Abound

Today, there are over a thousand generative AI startups building next-generation applications, many using NVIDIA technology on Google Cloud. Some notable ones include Writer and Runway.

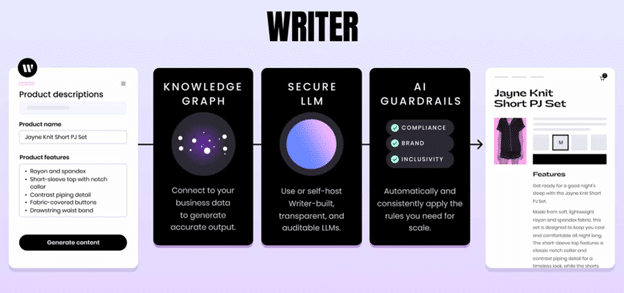

Writer uses transformer-based LLMs to enable marketing teams to quickly create copy for web pages, blogs, ads and more. To do this, the company harnesses NVIDIA NeMo, an application framework from NVIDIA AI Enterprise that helps companies curate their training datasets, build and customize LLMs, and run them in production at scale.

Using NeMo optimizations, Writer developers have gone from working with models with hundreds of millions of parameters to 40-billion-parameter models. The startup’s customer list includes household names like Deloitte, L’Oreal, Intuit, Uber and many other Fortune 500 companies.

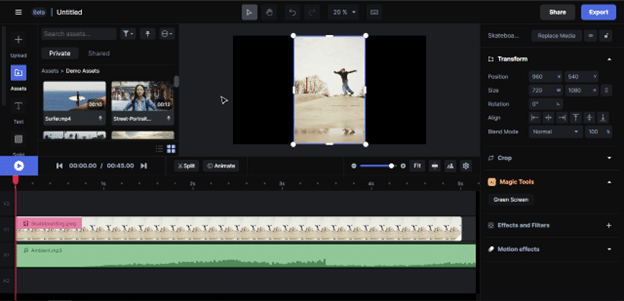

Runway uses AI to generate videos in any style. The AI model imitates specific styles prompted by given images or through a text prompt. Users can also use the model to create new video content using existing footage. This flexibility enables filmmakers and content creators to explore and design videos in a whole new way.

Google Cloud was the first CSP to bring the NVIDIA L4 GPU to the cloud. In addition, the companies have collaborated to enable Google’s Dataproc service to leverage the RAPIDS Accelerator for Apache Spark to provide significant performance boosts for ETL, available today with Dataproc on Google Compute Engine and soon for Serverless Dataproc.

The companies have also made NVIDIA AI Enterprise available on Google Cloud Marketplace and integrated NVIDIA acceleration software into the Vertex AI development environment.

Find more details about NVIDIA GPU instances on Google Cloud and how NVIDIA is powering generative AI. Sign up for generative AI news to stay up to date on the latest breakthroughs, developments and technologies. And read this technical blog to see how organizations are running their mission-critical enterprise applications with NVIDIA NeMo on the GPU-accelerated Google Cloud.