The shortage of Nvidia’s GPUs has customers searching the scrap heap to kickstart makeshift AI projects, and Intel is benefiting from it. Customers seeking quick implementation of AI projects are considering alternatives to GPUs, said David Zinsner, Intel’s chief financial officer, during a meeting with financial analysts at the Citi Global Technology Conference this week.

“The challenges in getting GPUs, I think we see more customers looking at Gaudi as an alternative. And in addition, the price points are better and more attractive,” Zinsner said.

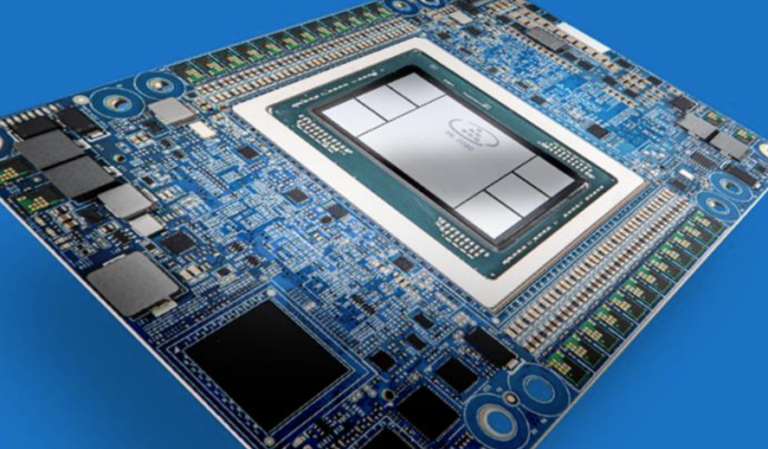

Zinsner also remarked that Gaudi and Gaudi2 chips are shipping, with Gaudi3 coming out in a “reasonable time period.”

During an earnings call last month, CEO Pat Gelsinger said the company has a $1 billion pipeline for AI chips. Zinsner said the pipeline refers not to AI chip orders secured by Intel but to “any customer that we could potentially see some business from who’s expressed some interest; we’re counting that then has to be converted to real dollars.”

Intel is considered a few years behind Nvidia in GPU technology. Nvidia reported revenue of $13.51 billion in its most recent quarter, up 101% from the year-ago quarter. Nvidia’s AI breakthroughs have made it a market leader and left Intel and AMD in the dust. Nvidia’s market cap is $1.14 trillion, while Intel’s is $160 billion.

“There’s a need for GPUs to do that [AI] work. I think we’re a beneficiary of that because of the CPU that we have,” Zinsner said.

Many Nvidia H100 GPU installations sit alongside Intel’s Sapphire Rapids chip, which supports DDR5 memory. However, a larger share of dollars is going to GPUs for large language models, which will continue to hurt Intel’s revenue in the coming quarters.

“That takes a little bit of a wind out of the sails of our data center business and is part of why we think Q3 and Q4 will be more muted than they’ve been in the past,” Zinsner said. Looking ahead, Intel is building a competitive AI product lineup to challenge Nvidia.

“That will really be our story in 2024: driving Gaudi together with CPUs. Ultimately, we’ll have our Falcon Shores GPU product out in 2025. A lot of that is building the software ecosystem,” Zinsner said. Intel is trying to reduce the confusion around its fastest AI chips by merging the discrete Gaudi AI chips into the Falcon Shores GPU.

“What’s going to happen is Gaudi will converge with Falcon Shores,” Zinsner said, adding, “There will be one product offering.” That will pack Intel’s fastest AI chips into a single product offering, reducing the confusion over whether customers should build their AI computing around Gaudi or Falcon Shores.

The general-purpose Falcon Shores with integrated Gaudi features is being positioned as a competitor to Nvidia’s GPUs. Like the H100, Falcon Shores supports general computing but also has low-precision compute functions targeted at drawing conclusions from patterns and trends in data.

The Gaudi lineup is also targeted at customers looking to build AI rigs in-house or in managed environments and not in the cloud, Zinsner said.

“As you look beyond the really big parameter training that requires scrubbing the entire Internet, and you start to look at it in a more contained environment, particularly in on-prem searches, it has definitely got a real performance level that’s equal to what you see from competitors,” Zinsner said.

Gaudi has yet to generate meaningful revenue from AI, and that could still be a few years away. Customers are still testing the chips before committing to purchases.

Companies like Supermicro are offering servers with Gaudi chips. Intel has also started shipping modified Gaudi2 chips to Chinese server makers.

But using Gaudi is more involved than buying it off the shelf and putting it to work. The software stack and models must be adapted to run the fastest on Gaudi chips, which are ASICs (application-specific integrated circuits). ASICs typically run fixed tasks and don’t have flexibility.

The Gaudi chips are also not easily available to test in the cloud. They are available in the Intel Developer Cloud, on Genesis, and in an AWS instance. Hugging Face hosts a Gaudi2 optimized for the 176-billion-parameter Bloomz model, which limits its usage.

By comparison, Nvidia GPUs are easier to run. Most models are adapted to run alongside cuDNN (CUDA Deep Neural Network library), which is the underlying library that drives AI implementations on its A100 and H100 chips. Intel will get there with Falcon Shores, which is a general-purpose chip.

As a result, customers are not lining up at Intel to buy the Gaudi chips. Instead, Intel is reaching out and proving the value of Gaudi chips to customers.

“This is one of those things where it just has to build momentum. Every quarter … we can build an earnings and are seeing strength. I think next year it will be a material number,” Zinsner said. Zinsner gave the example of how Intel stood up a Gaudi AI computing environment for Boston Consulting Group, which wanted to implement a trained large language model for search within a secure environment.

“I think we did that in about 12 weeks from the time we started talking to Boston Consulting,” Zinsner said. “They’re seeing a real opportunity to leverage this with their customer base.” Intel still views AI as a workload with different usages in the cloud, on-premises, and at the edge. However, the bigger AI opportunity for the company is outside the cloud.

“As you move farther away from the cloud, the opportunities get bigger because the architecture that works for cloud service providers is not the architecture that works for an enterprise customer in terms of the cost … and power usage of these systems,” Zinsner said.

Intel can use a top-down approach of figuring out a customer’s hardware requirements and building AI hardware and software into the sale, Zinsner said.

Intel is expected to focus entirely on AI at its upcoming Intel Innovation conference, which is being held later this month in San Jose.
