- Microsoft unveils two new chips to boost its AI infrastructure.

- Microsoft also extends partnerships with AMD and Nvidia.

- Can it navigate its latest personnel issues to push its AI agenda?

It’s been a busy week for Microsoft. Not only has the tech giant finally launched its own chips to power AI infrastructure, but the company also had to deal with the drama unfolding at OpenAI.

Sam Altman, CEO of OpenAI, has been sacked by the board and remains ousted. Since then, all the big news from Microsoft’s Ignite event has been overshadowed. It’s a big blow not just to OpenAI but to Microsoft itself, especially given the success OpenAI has had under Altman’s leadership.

In his keynote address at Ignite, Microsoft CEO Satya Nadella said that as OpenAI innovates, Microsoft will deliver all of that innovation as part of Azure AI by adding more to its catalog. This includes the new models as a service (MaaS) offering in Azure.

MaaS provides pay-as-you-go inference APIs and hosted fine-tuning for Llama 2 in the Azure AI model catalog. Microsoft is expanding its partnership with Meta to offer Llama 2 as the first family of large language models (LLMs) through MaaS in Azure AI Studio. MaaS makes it easy for generative AI developers to build LLM apps by offering access to Llama 2 as an API.

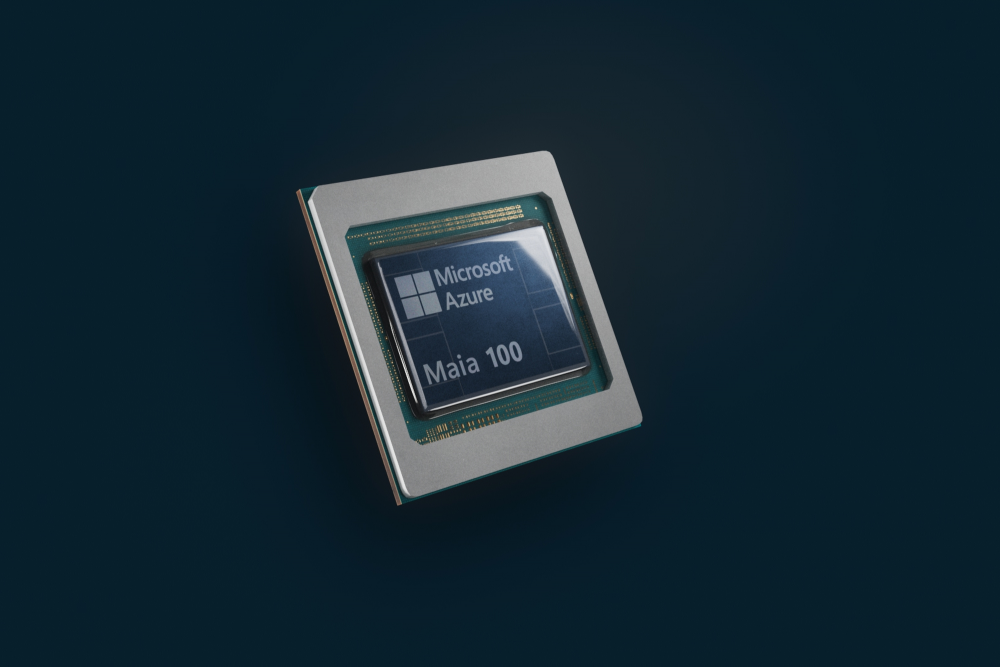

The Microsoft Azure Maia AI Accelerator is the first designed by Microsoft for large language model training and inferencing in the Microsoft Cloud. (Image by Microsoft).

Sam Altman joins Microsoft

While Altman was not present at the Ignite event, a report from Bloomberg said that executives at Microsoft were taken by surprise by the announcement. One person in particular who was left blindsided and furious by the news was Nadella.

As Microsoft is OpenAI’s biggest investor, Nadella is playing a central role in negotiations with the board on plans to bring Altman back. But it seems that ship may have sailed.

It may still be early days, but the decision to remove Altman may not only affect the future of OpenAI but could also cause ripples in how Microsoft moves forward with its big AI plans.

The Information reported that Microsoft, OpenAI’s biggest backer, is considering taking a role on the board if ousted CEO Sam Altman returns to the ChatGPT developer, according to two people familiar with the talks. Microsoft could either take a seat on OpenAI’s board of directors or serve as a board observer without voting power, one of the sources said.

However, in the latest development, Nadella tweeted that Altman will now join Microsoft instead.

“We remain committed to our partnership with OpenAI and have confidence in our product roadmap, our ability to continue to innovate with everything we announced at Microsoft Ignite, and in continuing to support our customers and partners,” Nadella tweeted.

Nadella’s tweet confirming Altman has joined Microsoft.

New Microsoft chips complete AI puzzle

Going back to Microsoft Ignite, the company announced two new chips to help improve its AI infrastructure. Given the increasing demand for AI workloads, the data centers processing these requests need to be able to cope with the load. That means having chips that can power the data center and meet customer needs.

While Microsoft is already in partnership with AMD and Nvidia for chips, the two new chips announced by the company could be the final piece Microsoft needs to be fully in control of its AI infrastructure supply chain.

The two new chips are:

- The Microsoft Azure Maia AI Accelerator – optimized for AI tasks and generative AI. It is designed specifically for the Azure hardware stack, and aligning the chip design with the larger AI infrastructure built with Microsoft’s workloads in mind can yield huge gains in performance and efficiency.

- The Microsoft Azure Cobalt CPU – an Arm-based processor tailored to run general-purpose compute workloads on the Microsoft Cloud. It’s optimized to deliver greater efficiency and performance in cloud-native offerings. The chip also aims to optimize Microsoft’s “performance per watt” throughout its data centers, which essentially means getting more computing power for each unit of energy consumed.
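To make the “performance per watt” idea concrete, here is a minimal sketch of the arithmetic. The function name and every figure in it are hypothetical and chosen only for illustration; none of them are Microsoft’s published numbers for Cobalt or any other chip.

```python
def performance_per_watt(operations_per_second: float, power_watts: float) -> float:
    """Return the work delivered per watt of power drawn."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return operations_per_second / power_watts


# Hypothetical figures for illustration only; not measurements of any real chip.
baseline = performance_per_watt(operations_per_second=1_000_000, power_watts=250)
improved = performance_per_watt(operations_per_second=1_200_000, power_watts=200)

# Relative gain in efficiency of the second chip over the first.
relative_gain = improved / baseline - 1
print(f"baseline: {baseline:.0f} ops/s per watt")
print(f"improved: {improved:.0f} ops/s per watt")
print(f"relative gain: {relative_gain:.0%}")
```

In this made-up case the second chip does more work while drawing less power, so its efficiency improves on both sides of the ratio.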

The chips represent the last puzzle piece for Microsoft when it comes to delivering infrastructure systems – which include everything from silicon choices, software and servers to racks and cooling systems – that have been designed from top to bottom and can be optimized with internal and customer workloads in mind.

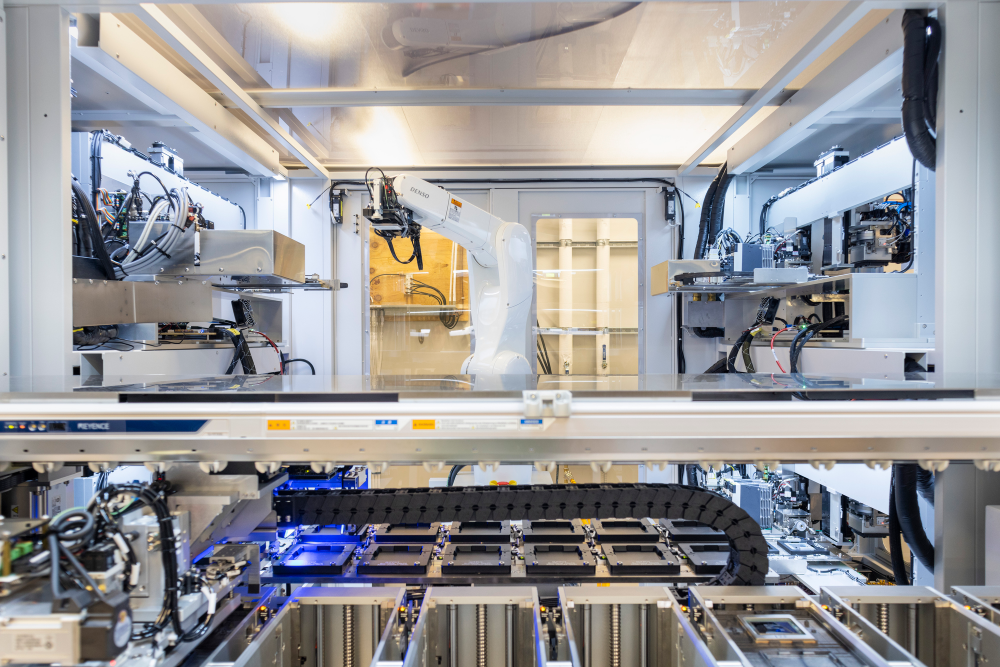

A system-level tester at a Microsoft lab in Redmond, Washington, mimics conditions that a chip will experience inside a Microsoft data center. (Image by Microsoft).

According to Omar Khan, general manager for Azure product marketing at Microsoft, Maia 100 is the first generation in the series, with 105 billion transistors, making it one of the largest chips on 5nm process technology. The innovations for Maia 100 span the silicon, software, network, racks, and cooling capabilities. This equips the Azure AI infrastructure with end-to-end system optimization tailored to meet the needs of groundbreaking AI such as GPT.

Meanwhile, Cobalt 100, also the first generation in its series, is a 64-bit 128-core chip that delivers up to a 40% performance improvement over current generations of Azure Arm chips and is powering services such as Microsoft Teams and Azure SQL.

“Networking innovation runs across our first-generation Maia 100 and Cobalt 100 chips. From hollow core fiber technology to the general availability of Azure Boost, we’re enabling faster networking and storage solutions in the cloud. You can now achieve up to 12.5 GBs throughput, 650K input-output operations per second in remote storage performance to run data-intensive workloads, and up to 200 GBs in networking bandwidth for network-intensive workloads,” said Khan.

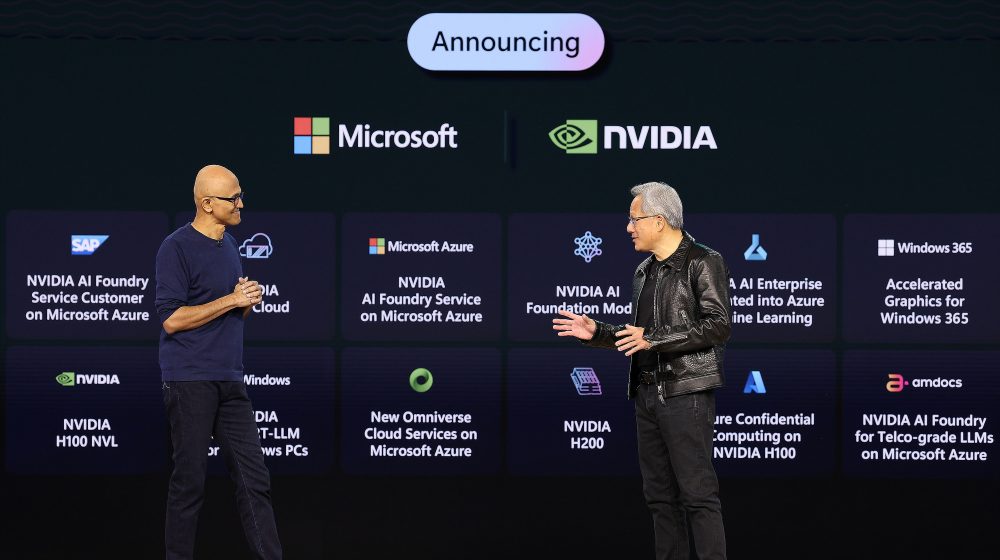

Chairman and CEO Satya Nadella and Nvidia founder, president and CEO Jensen Huang, at Microsoft Ignite 2023. (Source – Microsoft)

Chips for all

Microsoft joins a list of other companies that have decided to build their own chips, especially given the increasing cost of chips as well as the lengthy wait time to get them. In fact, most major tech companies have announced plans to build their own chips to support the growing demand and the use cases being developed.

For example, OpenAI had previously announced plans to have its own chips, despite relying heavily on Nvidia. In fact, Bloomberg reported that Sam Altman was actively seeking investors to get this deal going. Altman planned to spin up an AI-focused chip company that would produce semiconductors to compete against those from Nvidia Corp., which currently dominates the market for artificial intelligence tasks.

Other companies that are also designing and producing their own chips include Apple, Amazon, Meta, Tesla and Ford. Each of the chips is designed to cater to the company’s use cases.

Despite unveiling two new chips for AI, Microsoft also announced extensions to its partnerships with Nvidia and AMD. Azure works closely with Nvidia to provide Nvidia H100 Tensor Core graphics processing unit (GPU)-based virtual machines (VMs) for mid to large-scale AI workloads, including Azure Confidential VMs.

On top of that, Khan stated that Microsoft is adding the latest Nvidia H200 Tensor Core GPU to its fleet next year to support larger model inferencing with no increase in latency. With AMD, Khan stated that Microsoft customers can access AI-optimized VMs powered by AMD’s new MI300 accelerator early next year.

“These investments have allowed Azure to pioneer performance for AI supercomputing in the cloud and have consistently ranked us as the number one cloud in the top 500 of the world’s supercomputers. With these additions to the Azure infrastructure hardware portfolio, our platform enables us to deliver the best performance and efficiency across all workloads,” Khan stated.