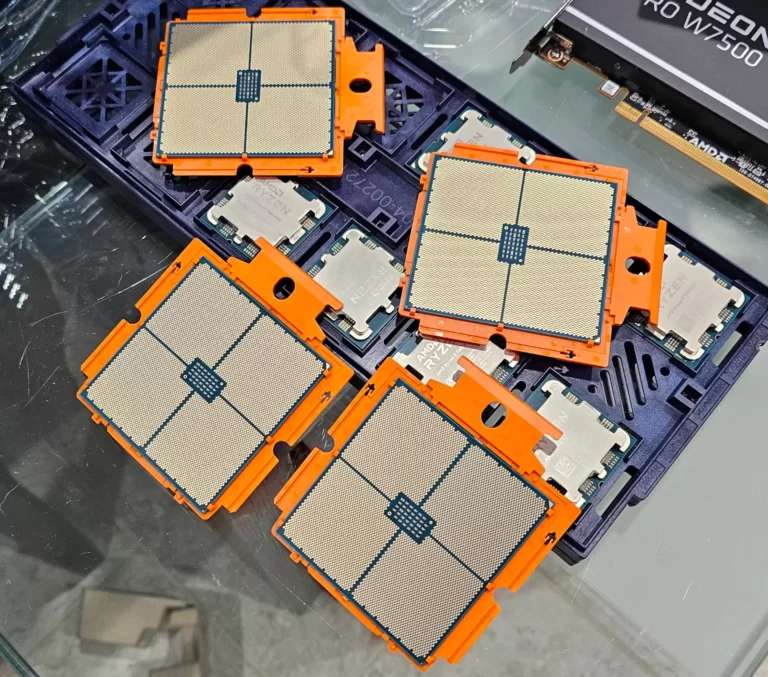

Along with the EEVDF scheduler replacing the CFS code in Linux 6.6, another fundamental and interesting change in Linux 6.6 is on the workqueue (WQ) side, with a rework that can benefit systems with multiple L3 caches, such as modern AMD chiplet-based systems.

With the workqueue changes for Linux 6.6, unbound workqueues now support more flexible affinity scopes. Tejun Heo explained in that pull:

“The default behavior is to soft-affine according to last level cache boundaries. A work item queued from a given LLC is executed by a worker running on the same LLC but the worker may be moved across cache boundaries as the scheduler sees fit. On machines with multiple L3 caches, which are becoming more popular along with chiplet designs, this improves cache locality while not harming work conservation too much.

Unbound workqueues are now also a lot more flexible in terms of execution affinity. Differing levels of affinity scopes are supported and both the default and per-workqueue affinity settings can be modified dynamically. This should help working around many of the sub-optimal behaviors observed recently with asymmetric ARM CPUs.

This involved significant restructuring of workqueue code. Nothing was reported yet but there’s some risk of subtle regressions. Should keep an eye out.”
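To see which CPUs would fall within the same last level cache boundary on a given machine, the L3 sharing information the kernel exposes in sysfs can be grouped as in the rough Python sketch below. This is only an illustration and not how the workqueue code itself builds its groupings. It assumes that `index3` under each CPU's `cache` directory is the L3 cache, which is typical on x86 but not guaranteed, and the helper names `parse_cpu_list`, `group_by_llc`, and `read_l3_sharing` are made up for this example.

```python
from collections import defaultdict
from pathlib import Path


def parse_cpu_list(text):
    """Expand a kernel CPU list such as "0-2,12-14" into a sorted list of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def group_by_llc(shared_lists):
    """Group CPUs that report the same L3 sharing set.

    shared_lists maps a CPU number to its raw shared_cpu_list string.
    Returns one sorted CPU list per distinct L3 cache, ordered by first CPU.
    """
    groups = defaultdict(list)
    for cpu, raw in shared_lists.items():
        groups[tuple(parse_cpu_list(raw))].append(cpu)
    return sorted(sorted(members) for members in groups.values())


def read_l3_sharing(sysfs_root="/sys/devices/system/cpu"):
    """Read each CPU's L3 shared_cpu_list from sysfs.

    Assumption: cache/index3 is the L3 cache on this machine.
    Returns an empty dict if the files are not present.
    """
    result = {}
    pattern = "cpu[0-9]*/cache/index3/shared_cpu_list"
    for cache_file in Path(sysfs_root).glob(pattern):
        cpu = int(cache_file.parts[-4][3:])  # directory name "cpu12" -> 12
        result[cpu] = cache_file.read_text()
    return result
```

Calling `group_by_llc(read_l3_sharing())` on a Linux system should return one list of CPU numbers per L3 cache. On a chiplet-based processor that means several groups within a single NUMA node, which is the boundary the new default soft affinity follows.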

The patch series from when this code was being worked on adds more context:

“Unbound workqueues used to spray work items inside each NUMA node, which isn't great on CPUs w/ multiple L3 caches. This patchset implements mechanisms to improve and configure execution locality.

…

This has been mostly fine but CPUs became a lot more complex with many more cores and multiple L3 caches inside a single node with [differing] distances across them, and it looks like it’s high time to improve workqueue’s locality awareness.

…

Ryzen 9 3900x – 12 cores / 24 threads spread across 4 L3 caches. Core-to-core latencies across L3 caches are ~2.6x worse than within each L3 cache. ie. it's worse but not hugely so. This means that the impact of L3 cache locality is noticeable in these experiments but may be subdued compared to other setups.”

The patch series is promising, and it will be interesting to see how this code, which is part of Linux 6.6, pans out.

With all of the interesting changes built up for the Linux 6.6 merge window, which culminates today with the Linux 6.6-rc1 release, I'll be benchmarking many systems over the days ahead to look at Linux 6.6 performance on AMD and Intel systems compared to prior kernel releases.