In the rapidly advancing field of Artificial Intelligence (AI) and Machine Learning (ML), building intelligent systems that align smoothly with human preferences is essential. The development of Large Language Models (LLMs), which seek to imitate humans by generating content and answering questions like a human, has led to massive popularity in AI.

SteerLM, recently introduced as a technique for supervised fine-tuning, gives end users more control over model responses during inference. In contrast to traditional methods like Reinforcement Learning from Human Feedback (RLHF), SteerLM uses a multi-dimensional collection of explicitly stated attributes. This lets users direct the model to produce responses that satisfy preset standards, such as helpfulness, and allows customization based on particular requirements.
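The idea of steering a model with explicitly stated attributes can be sketched in a few lines. This is a minimal illustration and not NVIDIA's actual prompt template: the function name `build_steered_prompt`, the `<attributes>` tag, and the validation rules are assumptions made for the example, while the attribute names follow the columns of the HelpSteer dataset.

```python
from __future__ import annotations

# Hypothetical sketch of attribute-conditioned prompting in the spirit of SteerLM.
# The template and tag below are illustrative assumptions, not the real format.

ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


def build_steered_prompt(user_prompt: str, targets: dict[str, int]) -> str:
    """Prepend the desired attribute values to the user's prompt."""
    unknown = set(targets) - set(ATTRIBUTES)
    if unknown:
        raise ValueError(f"unknown attributes: {sorted(unknown)}")
    for name, value in targets.items():
        # Assumed 0 to 4 rating scale for every attribute.
        if not 0 <= value <= 4:
            raise ValueError(f"{name} must be between 0 and 4, got {value}")
    # Emit attributes in a fixed order so the conditioning string is deterministic.
    spec = ",".join(f"{name}:{targets[name]}" for name in ATTRIBUTES if name in targets)
    return f"<attributes>{spec}</attributes>\n{user_prompt}"


if __name__ == "__main__":
    # Ask for a highly helpful but terse answer.
    print(build_steered_prompt(
        "Explain what a hash map is.",
        {"helpfulness": 4, "verbosity": 1},
    ))
```

In a setup like this, changing only the requested values (for example, raising `verbosity`) would be the way a user asks the same model for a different style of answer at inference time.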

The criterion that distinguishes more helpful responses from less helpful ones is not well defined in the open-source datasets currently available for training language models on helpfulness preferences. As a result, models trained on these datasets sometimes unintentionally learn to favor specific dataset artifacts, such as giving longer responses more weight than they deserve, even when those responses are not that helpful.
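One simple way to look for such a length artifact is to correlate response length with the preference label. The sketch below assumes a hypothetical record layout (`response` text plus a binary `preferred` flag) and uses made-up toy rows, so it illustrates the check rather than reproducing any measurement from the paper.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def length_preference_correlation(samples):
    """Correlate response length in words with the (assumed) preference flag."""
    lengths = [len(s["response"].split()) for s in samples]
    labels = [s["preferred"] for s in samples]
    return pearson(lengths, labels)


# Toy rows invented for illustration only; they are not taken from any dataset.
TOY_SAMPLES = [
    {"response": "Yes.", "preferred": 0},
    {"response": "Yes, because the cache is warm.", "preferred": 1},
    {"response": "No.", "preferred": 0},
    {"response": "No, and here is a long explanation of why not.", "preferred": 1},
]

if __name__ == "__main__":
    print(f"length/preference correlation: {length_preference_correlation(TOY_SAMPLES):.3f}")
```

A strongly positive value on real preference data would suggest that length alone predicts the label, which is the kind of artifact a model can latch onto.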

To overcome this challenge, a team of researchers from NVIDIA has introduced a dataset called HELPSTEER, an extensive compilation annotated for several factors that influence how helpful a response is. The dataset contains 37,000 samples with annotations for verbosity, coherence, correctness, and complexity, along with an overall helpfulness rating for every response. These attributes go beyond a simple length-based preference to offer a more nuanced view of what constitutes a truly helpful response.

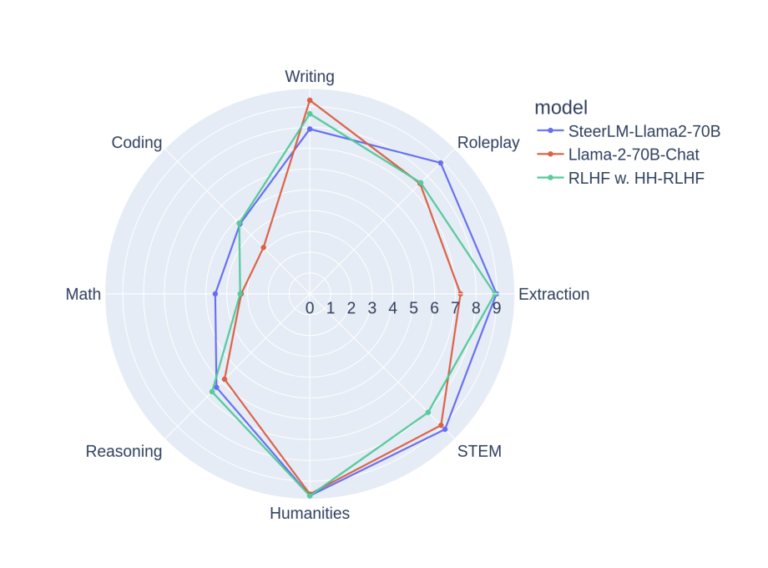

The team used the Llama 2 70B model with the SteerLM technique to train language models efficiently on this dataset. The final model outperformed all other open models that do not use training data from more complex models such as GPT-4, achieving a high score of 7.54 on MT Bench. This demonstrates how well the HELPSTEER dataset works to improve language model performance and resolve issues with other datasets.

The team has released the HELPSTEER dataset under the Creative Commons Attribution 4.0 International License. This publicly available dataset can be used by language researchers and developers to continue the development and testing of helpfulness-preference-focused language models. The dataset can be accessed on HuggingFace at https://huggingface.co/datasets/nvidia/HelpSteer.
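For readers who want to explore the data, a minimal sketch of loading it with the Hugging Face `datasets` library is shown below. The helper names `load_helpsteer` and `mean_scores` are hypothetical, and the code assumes each row exposes the five annotated attributes as integer columns named `helpfulness`, `correctness`, `coherence`, `complexity`, and `verbosity`.

```python
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


def load_helpsteer(split="train"):
    """Download one split of HelpSteer from the Hugging Face Hub."""
    # Imported lazily so mean_scores() can be used without the dependency.
    from datasets import load_dataset

    return load_dataset("nvidia/HelpSteer", split=split)


def mean_scores(rows):
    """Average each annotated attribute over an iterable of dict-like rows."""
    totals = {name: 0 for name in ATTRIBUTES}
    count = 0
    for row in rows:
        for name in ATTRIBUTES:
            totals[name] += row[name]
        count += 1
    if count == 0:
        raise ValueError("no rows to average")
    return {name: totals[name] / count for name in ATTRIBUTES}
```

With the `datasets` package installed and network access available, calling `mean_scores(load_helpsteer("train"))` would give a quick per-attribute summary. Because `mean_scores` works on any iterable of dictionaries, it can also be tried on a handful of hand-written rows first.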

The team has summarized their primary contributions as follows:

- A 37k-sample helpfulness dataset has been developed, consisting of responses annotated for correctness, coherence, complexity, verbosity, and overall helpfulness.

- Llama 2 70B has been trained using the dataset and has achieved a leading MT Bench score of 7.54, outperforming open models that do not rely on private data, such as data generated by GPT-4.

- The dataset has been made publicly available under a CC-BY-4.0 license to promote community access for further study and development based on the findings.

In conclusion, the HELPSTEER dataset is a welcome addition, as it fills a significant gap in currently available open-source datasets. The dataset has proven effective in teaching language models to prioritize attributes such as correctness, coherence, complexity, and verbosity, leading to better results.

Check out the Paper and Dataset. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.