Nvidia Corporation’s (NASDAQ:NVDA) most recent quarterly earnings report once again crushed market expectations, and the company offered jaw-dropping fiscal Q3 2024 guidance of around $16 billion in revenue. While the results are undoubtedly impressive, skeptics question whether this volume of AI chip sales is sustainable. On the earnings call, however, Nvidia executives shared valuable insights into the company’s software business, which are vital to understanding why the bull case for the stock is getting stronger. Nexus Research maintains a “buy” rating on the stock.

In our previous article, published ahead of Nvidia’s fiscal Q2 2024 earnings report, we argued that investors should not be too concerned about supply chain constraints: no company was better prepared for the artificial intelligence (“AI”) revolution than Nvidia, and the company had secured sufficient supply of the necessary materials to meet customers’ high demand for its AI chips. Given that Nvidia was once again able to guide to around $16 billion in revenue for Q3, investors can indeed be confident that supply chain constraints are not too big an issue for the tech giant.

With key rival Advanced Micro Devices, Inc. (AMD) set to start shipping its own AI chip, the MI300, in the fourth quarter, investors are keen to observe whether it can effectively compete against Nvidia. On AMD’s last earnings call, CEO Lisa Su tried to trumpet data center customers’ “strong interest” in its MI300 chip, as well as its less advanced MI250 chip, which it is already shipping. However, AMD is expecting GPU sales of less than $500 million this year, despite the upcoming launch of MI300 in Q4. This tells us that AMD is not benefitting much from the ongoing AI revolution that is already transforming the data center industry.

Nvidia, on the other hand, is enjoying enormous demand for its AI chips, both for the latest H100 GPU (based on the Hopper architecture) and for its predecessor, the A100 (based on the Ampere architecture). On the Q1 2024 earnings call, Nvidia CEO Jensen Huang shared:

“generative AI large language models are driving the surge in demand, and it’s broad-based across both our consumer Internet companies, our CSPs, our enterprises, and our AI start-ups.

It is also interest in both of our architectures, both of our Hopper latest architecture as well as our Ampere architecture. This is not surprising as we generally often sell both of our architectures at the same time.”

So where Hopper is too expensive, customers are opting for Ampere instead, which to some extent reduces the risk of them looking for cheaper alternatives from the likes of AMD. Nvidia’s lead is further extended by its popular CUDA software platform, as developers continue to build AI frameworks and applications specifically accelerated by Nvidia’s GPUs.

If Nvidia can continue to foster the software ecosystem around both Hopper and Ampere, it will keep these customers locked into the Nvidia ecosystem. Hence, even when AMD launches its cheaper alternative to Nvidia’s AI chips, it will be hard for AMD to attract data center customers without a comparable software toolkit and ecosystem around its products.

This is how Nvidia’s first-mover advantage materializes, and in fact compounds. While AMD plays catch-up by developing its own AI chip, Nvidia’s lead continues to widen as the software ecosystem around its chips keeps growing. The accompanying software makes Nvidia’s products increasingly useful across industries, so more customers opt for them. Those customers in turn develop their own software applications around Nvidia’s solutions, and the network effect reinforces itself.

AMD is so late to the game that data center customers who find Hopper too expensive are having to opt for Ampere, allowing Nvidia to draw them into its ecosystem with its previous-generation products, given that AMD offers no worthwhile alternative yet. A key competitive factor for AMD’s products is a lower price tag relative to Nvidia’s, which serves as AMD’s entry point into the market.

To put it simply, when customers deploying their capex budgets find Nvidia’s products unaffordable, it creates an entry point for AMD. However, AMD hasn’t even started shipping its advanced AI chip while data centers are already upgrading their infrastructure, so it is missing that entry point. This gives Nvidia pricing power and sustains demand for its previous-generation products in the absence of powerful alternatives. The absence of adequate alternatives also allows Nvidia’s software ecosystem to continue growing rapidly.

But the growth potential of Nvidia’s software revenue rests on more than a lack of competitive alternatives. On the Q2 2024 earnings call, Jensen Huang explained what is driving growth for Nvidia’s software solutions:

“so we have a run time called AI Enterprise. This is one part of our software stack. And this is, if you will, the run time that just about every company uses for the end-to-end of machine learning from data processing, the training of any model that you like to do on any framework you’d like to do, the inference and the deployment, the scaling it out into a data center. It could be a scale-out for a hyperscale data center. It could be a scale-out for enterprise data center, for example, on VMware.

You can do this on any of our GPUs. We have hundreds of millions of GPUs in the field and millions of GPUs in the cloud and just about every single cloud. And it runs in a single GPU configuration as well as multi-GPU per compute or multi-node. It also has multiple sessions or multiple computing instances per GPU. So from multiple instances per GPU to multiple GPUs, multiple nodes to entire data center scale.”

So what does he mean by all this, and how does it boost Nvidia’s software revenue prospects for shareholders?

Let’s start by defining what instances are. “Instances” in the context of GPUs are essentially isolated partitions of a single GPU, each with its own share of the GPU’s compute and memory resources, which allow the GPU to run different workloads simultaneously.

The H100 GPU uses a technology called Multi-Instance GPU:

“Multi-Instance GPU [MIG] expands the performance and value of NVIDIA H100, A100, and A30 Tensor Core GPUs. MIG can partition the GPU into as many as seven instances.”

So the reason Jensen is talking about GPU processing capacity in the context of software is that the more computing instances a GPU can support, the more software applications can run on it at the same time. This opens up the opportunity for Nvidia to sell more software services.

But it doesn’t stop there. Multiple GPUs are combined to power customers’ AI workloads such as training and inference, and large fleets of GPUs in turn make up entire data centers. So you can get a sense of the enormous volume of computing and the number of instances running simultaneously. Essentially, this multiplies the amount of software that can be run across data centers. And the more software applications being run, the more software revenue Nvidia can generate.

There are various ways in which Nvidia can monetize the use of its software services. Usage-based licenses are common in the software industry, whereby a vendor charges based on the number of concurrent instances or sessions. If customers run more sessions on a GPU, and if licensing costs are per session, software revenue scales with the number of sessions. Given the high processing capacity of each Nvidia GPU, and extrapolating that across the millions of GPUs Huang says are deployed in the cloud, the software revenue opportunity is indeed enormous.
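To make the per-session reasoning concrete, here is a minimal sketch of how revenue would scale under a purely hypothetical per-instance license. The fleet size and the price are illustrative assumptions, not Nvidia’s actual pricing or terms; the only figure taken from the text is the MIG limit of seven instances per GPU.

```python
def annual_license_revenue(gpus: int, instances_per_gpu: int,
                           price_per_instance: float) -> float:
    """Annual revenue if every instance on every GPU carries one license.

    Assumes a flat, hypothetical per-instance price; real licensing
    terms may look nothing like this.
    """
    if not 1 <= instances_per_gpu <= 7:
        # "As many as seven instances" per GPU, per the MIG description quoted above.
        raise ValueError("expected between 1 and 7 instances per GPU")
    return gpus * instances_per_gpu * price_per_instance


# Hypothetical inputs, chosen only to show the scaling.
FLEET_SIZE = 10_000          # GPUs in one customer's data center (assumption)
PRICE_PER_INSTANCE = 100.0   # dollars per instance per year (assumption)

for instances in (1, 4, 7):
    revenue = annual_license_revenue(FLEET_SIZE, instances, PRICE_PER_INSTANCE)
    print(f"{instances} instance(s) per GPU: ${revenue:,.0f} per year")
```

Under these assumptions, revenue grows linearly with the number of licensed instances per GPU, which is the mechanism the paragraph above describes.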

Huang went on to say:

“So this run time called NVIDIA AI enterprise has something like 4,500 software packages, software libraries and has something like 10,000 dependencies among each other.”

In other words, when a customer uses certain software packages and libraries as part of NVIDIA AI Enterprise (or other Nvidia software platforms), they will likely end up using adjacent packages and libraries offered through Nvidia’s platforms as well, given how interconnected these software solutions are. This should ultimately translate into higher software revenue for Nvidia, to the benefit of shareholders.

Furthermore, as long as Nvidia’s GPUs can support more instances than competitors’ chips, software developers will prioritize developing and optimizing for Nvidia’s GPUs, because customers are more likely to spend on software services if their GPUs can run more software at once. This creates a virtuous network effect for Nvidia: the increasing capacity of its GPUs attracts both more customers and more software developers, and the growing availability of software in turn attracts even more customers, and so the cycle continues, to the benefit of Nvidia shareholders.

In fact, Jensen Huang also highlighted Nvidia’s ability to attract software developers to its ecosystem and retain them:

“The second characteristic of our company is the installed base. You have to ask yourself, why is it that all the software developers come to our platform? And the reason for that is because software developers seek a large installed base so that they can reach the largest number of end users, so that they could build a business or get a return on the investments that they make.

And then the third characteristic is reach. We’re in the cloud today, both for public cloud, public-facing cloud because we have so many customers that use — so many developers and customers that use our platform. CSPs are delighted to put it up in the cloud. They use it for internal consumption to develop and train and to operate recommender systems or search or data processing engines and what not all the way to training and inference. And so we’re in the cloud, we’re in enterprise.”

As more and more of the world’s cloud providers and enterprises use Nvidia’s GPUs and software solutions like NVIDIA AI Enterprise, the market that software developers can reach keeps growing. To build a business out of the services they offer, software developers need the assurance of a large addressable market, and Nvidia’s ecosystem has grown large enough (and continues to grow) for them to make a living. As more developers create software specifically optimized for Nvidia’s GPUs and ecosystem, more customers will be inclined to use Nvidia’s technology platform to build their AI applications, and so once again the virtuous network effect continues in favor of Nvidia and its shareholders.

This is what makes it challenging for competitors like AMD to catch up: not only do they need to produce an AI chip that delivers competitive performance, but they also need to sell a large enough volume of chips to support a sufficiently large software ecosystem around their technology. It will be difficult for competitors to attract enough software developers and assure them that they can build a business on their platforms, the way Nvidia has.

Financials & Valuation

Now that we have established a more solid understanding of Nvidia’s software prospects, let us discuss the implications for financial performance. Software tends to carry higher margins than hardware, allowing for greater profit margin expansion.

On the last earnings call, CFO Colette Kress shared:

“Now we’re seeing, at this point, probably hundreds of millions of dollars annually for our software business, and we are looking at NVIDIA AI enterprise to be included with many of the products that we’re selling, such as our DGX, such as our PCIe versions of our H100. And I think we’re going to see more availability even with our CSP marketplaces. So we’re off to a great start, and I do believe we’ll see this continue to grow going forward.”

For a company that is projected to generate over $50 billion in revenue this year, a software business generating “hundreds of millions of dollars” of annual revenue will have limited impact on overall profitability. Nonetheless, the CFO did share that:
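As a rough check on scale, the sketch below computes software’s share of total revenue. The $50 billion total comes from the projection above; the three software figures are assumptions that simply span the range “hundreds of millions” could mean, since the CFO gave no exact number.

```python
def revenue_share(segment_revenue: float, total_revenue: float) -> float:
    """Fraction of total revenue contributed by one segment."""
    return segment_revenue / total_revenue


TOTAL_REVENUE = 50e9  # projected annual revenue cited in the text

# Assumed points within "hundreds of millions of dollars"; not disclosed figures.
for software_revenue in (100e6, 500e6, 900e6):
    share = revenue_share(software_revenue, TOTAL_REVENUE)
    print(f"${software_revenue / 1e6:,.0f}M of software revenue = {share:.1%} of total")
```

Even at the top of that assumed range, software would be under 2% of total revenue, which is why its direct effect on overall profitability is limited for now.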

“GAAP gross margins expanded to 70.1% and non-GAAP gross margin to 71.2%, driven by higher data center sales. Our Data Center products include a significant amount of software and complexity, which is also helping drive our gross margin.”

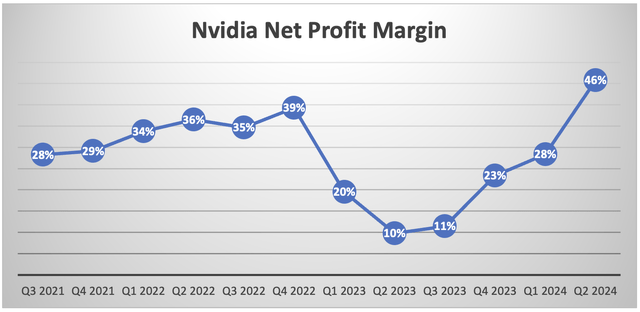

Hence, the software business is already helping expand gross profit margins to a certain extent, and is flowing down to the bottom line to also expand net profit margins. Nvidia’s net margin jumped to a whopping 46% last quarter.

Data Source: Company filings

As software revenue grows to become an increasingly large proportion of total revenue over time, it should allow for further profit margin expansion, bolstering earnings growth. This is what gives investors confidence that Nvidia will be able to grow into its expensive valuation multiple.
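The mix-shift argument can be sketched as a weighted average of segment margins. Both margin figures below are hypothetical placeholders (Nvidia does not report a separate software gross margin), so the output only illustrates the direction of the effect, not a forecast.

```python
def blended_gross_margin(software_share: float,
                         software_margin: float,
                         hardware_margin: float) -> float:
    """Revenue-weighted average gross margin of a two-segment business."""
    if not 0.0 <= software_share <= 1.0:
        raise ValueError("software_share must be between 0 and 1")
    return (software_share * software_margin
            + (1.0 - software_share) * hardware_margin)


# Hypothetical margins, for illustration only.
HARDWARE_MARGIN = 0.70
SOFTWARE_MARGIN = 0.85

for share in (0.01, 0.05, 0.10, 0.20):
    margin = blended_gross_margin(share, SOFTWARE_MARGIN, HARDWARE_MARGIN)
    print(f"software at {share:.0%} of revenue: blended gross margin {margin:.1%}")
```

As long as the software margin exceeds the hardware margin, every increase in software’s revenue share lifts the blended margin.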

Nvidia stock currently trades at over 52x next year’s earnings. The bears love to point out just how expensive the stock is, and often focus too much on Nvidia’s sales of AI chips, citing risks like “too much demand being pulled forward already” and “competitors and cloud giants producing their own chips.” And there could indeed be periods when they are right. Any hint of competitive pressure or a slowdown in chip sales could send the stock plummeting.

But this is no reason not to hold exposure to the stock. While skeptics have sat on the sidelines waiting for a bearish development to unfold, the stock has continued to climb higher, while in fact becoming cheaper based on a price to forward earnings multiple, as the earnings growth is just that monstrous.
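A stock can climb while its forward multiple falls whenever expected earnings grow faster than the price. The sketch below shows the arithmetic with made-up prices and earnings estimates; none of these numbers are Nvidia’s.

```python
def forward_pe(price: float, forward_eps: float) -> float:
    """Price divided by expected earnings per share over the next year."""
    if forward_eps <= 0:
        raise ValueError("forward EPS must be positive for a meaningful multiple")
    return price / forward_eps


# Hypothetical before/after snapshot: price up 50%, forward EPS estimate up 100%.
before = forward_pe(price=100.0, forward_eps=1.5)
after = forward_pe(price=150.0, forward_eps=3.0)

print(f"before: {before:.1f}x forward earnings")
print(f"after:  {after:.1f}x forward earnings")
```

In this illustration the price rises by half, yet the multiple compresses because the earnings estimate doubled.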

Nexus Research recommends starting to buy the stock at 52x forward earnings, and deploying a dollar-cost averaging strategy, in case the stock plummets. Instead of fearing a big correction, investors should approach such a development as an opportunity to buy more. When weak hands that are too focused on AI chip sales sell their stock positions out of fear that the growth story is over, it is an opportunity for long-term investors to double down on the stock, understanding Nvidia’s software moat and growth prospects. Nexus Research maintains a “buy” rating on the stock.
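For readers unfamiliar with dollar-cost averaging, the sketch below shows why investing a fixed dollar amount at regular intervals lowers the average cost per share when the price dips: the same dollars buy more shares at lower prices. The price path and the amount invested are hypothetical.

```python
def average_cost_per_share(prices: list[float], dollars_per_purchase: float) -> float:
    """Average cost per share when the same dollar amount is invested at each price."""
    if not prices or any(p <= 0 for p in prices):
        raise ValueError("prices must be a non-empty list of positive numbers")
    total_invested = dollars_per_purchase * len(prices)
    total_shares = sum(dollars_per_purchase / p for p in prices)
    return total_invested / total_shares


# Hypothetical price path with a sharp correction and a recovery.
PRICES = [100.0, 80.0, 50.0, 80.0, 100.0]

avg_cost = average_cost_per_share(PRICES, dollars_per_purchase=1_000.0)
simple_avg_price = sum(PRICES) / len(PRICES)

print(f"average cost per share: ${avg_cost:.2f}")
print(f"simple average price:   ${simple_avg_price:.2f}")
```

The average cost comes out below the simple average of the prices paid, because more shares are accumulated during the correction.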