GPUs have been called the rare earth metals, even the gold, of artificial intelligence, because they’re foundational for today’s generative AI era.

Three technical reasons, and many stories, explain why that’s so. Each reason has multiple facets well worth exploring, but at a high level:

- GPUs make use of parallel processing.

- GPU systems scale up to supercomputing heights.

- The GPU software stack for AI is broad and deep.

The net result is that GPUs perform technical calculations faster and with greater energy efficiency than CPUs. That means they deliver leading performance for AI training and inference, as well as gains across a wide array of applications that use accelerated computing.

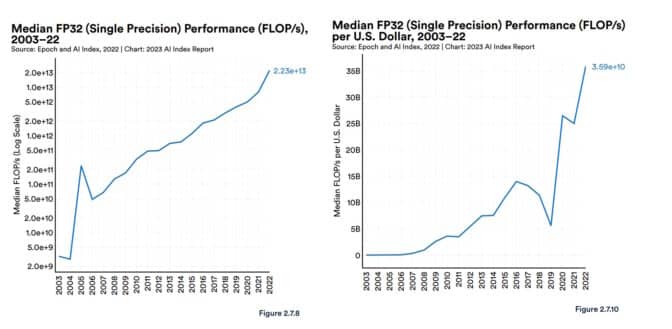

In its recent report on AI, Stanford’s Human-Centered AI group provided some context. GPU performance “has increased roughly 7,000 times” since 2003 and price per performance is “5,600 times greater,” it reported.

The report also cited analysis from Epoch, an independent research group that measures and forecasts AI advances.

“GPUs are the dominant computing platform for accelerating machine learning workloads, and most (if not all) of the biggest models over the last five years have been trained on GPUs … [they have] thereby centrally contributed to the recent progress in AI,” Epoch said on its site.

A 2020 study assessing AI technology for the U.S. government drew similar conclusions.

“We expect [leading-edge] AI chips are one to three orders of magnitude more cost-effective than leading-node CPUs when counting production and operating costs,” it said.

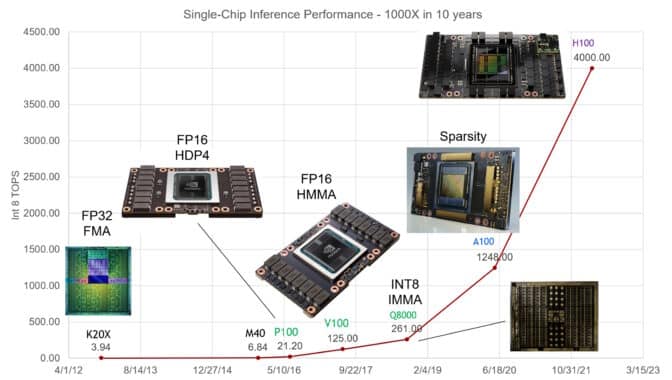

NVIDIA GPUs have increased performance on AI inference 1,000x in the last ten years, said Bill Dally, the company’s chief scientist, in a keynote at Hot Chips, an annual gathering of semiconductor and systems engineers.

ChatGPT Spread the News

ChatGPT provided a powerful example of how GPUs are great for AI. The large language model (LLM), trained and run on thousands of NVIDIA GPUs, runs generative AI services used by more than 100 million people.

Since its 2018 launch, MLPerf, the industry-standard benchmark for AI, has provided numbers that detail the leading performance of NVIDIA GPUs on both AI training and inference.

For example, NVIDIA Grace Hopper Superchips swept the latest round of inference tests. NVIDIA TensorRT-LLM, inference software released since that test, delivers up to an 8x boost in performance and more than a 5x reduction in energy use and total cost of ownership. Indeed, NVIDIA GPUs have won every round of MLPerf training and inference tests since the benchmark was released in 2019.

In February, NVIDIA GPUs delivered leading results for inference, serving up thousands of inferences per second on the most demanding models in the STAC-ML Markets benchmark, a key technology performance gauge for the financial services industry.

A Red Hat software engineering team put it succinctly in a blog: “GPUs have become the foundation of artificial intelligence.”

AI Under the Hood

A brief look under the hood shows why GPUs and AI make a powerful pairing.

An AI model, also called a neural network, is essentially a mathematical lasagna, made from layer upon layer of linear algebra equations. Each equation represents the likelihood that one piece of data is related to another.

For their part, GPUs pack thousands of cores, tiny calculators working in parallel to slice through the math that makes up an AI model. This, at a high level, is how AI computing works.
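To make the lasagna picture concrete, here is a minimal sketch in plain Python of a two-layer network. The weights, the layer sizes and the helper names (`matvec`, `relu`, `forward`) are made up for illustration and are not taken from any NVIDIA library. The point to notice is that every output of a layer is its own independent dot product, which is exactly the kind of work thousands of cores can split among themselves.

```python
def matvec(weights, vector):
    # Each row produces one output via an independent dot product.
    # Nothing in one row depends on another row, so a GPU could
    # hand every row to a different core and compute them all at once.
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def relu(vector):
    # A common nonlinearity applied between layers: negatives become zero.
    return [max(0.0, v) for v in vector]


def forward(layers, inputs):
    # Stack the layers: the output of one layer feeds the next.
    activations = inputs
    for weights in layers:
        activations = relu(matvec(weights, activations))
    return activations


# Hypothetical toy weights: layer 1 maps 2 inputs to 3 outputs,
# layer 2 maps those 3 values to a single output.
layers = [
    [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]],
    [[1.0, 1.0, 1.0]],
]
result = forward(layers, [2.0, 1.0])
print(result)
```

Real models run the same pattern with matrices holding millions or billions of weights, which is why spreading the dot products across many cores pays off.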

Highly Tuned Tensor Cores

Over time, NVIDIA’s engineers have tuned GPU cores to the evolving needs of AI models. The latest GPUs include Tensor Cores that are 60x more powerful than the first-generation designs for processing the matrix math neural networks use.

In addition, NVIDIA Hopper Tensor Core GPUs include a Transformer Engine that can automatically adjust to the optimal precision needed to process transformer models, the class of neural networks that spawned generative AI.
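The idea of trading precision for speed can be illustrated with a toy example. The sketch below is not how the Transformer Engine works internally; it is a hypothetical rule, written with Python's standard `struct` module, that picks the narrowest floating-point format whose rounding error stays under a tolerance the caller chooses. The function names and the tolerance values are assumptions made for this illustration.

```python
import struct


def round_to(fmt, value):
    # Round-trip a Python float through a narrower format.
    # "e" is IEEE 754 half precision (16 bits), "f" is single (32 bits).
    try:
        return struct.unpack(fmt, struct.pack(fmt, value))[0]
    except OverflowError:
        # The value does not fit in the narrower format at all.
        return float("inf")


def max_relative_error(fmt, values):
    # Worst-case relative rounding error over a batch of values.
    worst = 0.0
    for v in values:
        if v == 0.0:
            continue  # zero is exact in every format
        worst = max(worst, abs(round_to(fmt, v) - v) / abs(v))
    return worst


def choose_precision(values, tolerance):
    # Hypothetical policy: try the cheapest format first and fall back
    # to wider ones only when the error budget is exceeded.
    for name, fmt in (("fp16", "e"), ("fp32", "f")):
        if max_relative_error(fmt, values) <= tolerance:
            return name
    return "fp64"


print(choose_precision([0.5, 1.0, 2.0], 1e-3))
print(choose_precision([70000.0], 1e-3))
```

Narrower formats move less data and let the hardware do more math per cycle, so staying in the smallest format that preserves accuracy is where the gains come from.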

Along the way, each GPU generation has packed more memory and optimized techniques to store an entire AI model in a single GPU or set of GPUs.

Models Grow, Systems Expand

The complexity of AI models is expanding a whopping 10x a year.

The current state-of-the-art LLM, GPT-4, packs more than a trillion parameters, a metric of its mathematical density. That’s up from less than 100 million parameters for a popular LLM in 2018.
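A quick back-of-the-envelope check shows how these figures fit together. The sketch below uses the round numbers quoted above (100 million and one trillion parameters, 10x a year) as inputs; the function name is made up for illustration, and the result is only as precise as those rounded bounds.

```python
import math


def years_to_grow(start, end, factor_per_year):
    # Solve start * factor_per_year ** years == end for years.
    return math.log(end / start) / math.log(factor_per_year)


# Round figures from the text: 100 million to 1 trillion parameters
# at a growth rate of 10x per year.
years = years_to_grow(100e6, 1e12, 10)
print(round(years, 2))
```

At a steady 10x a year, the jump from 100 million to a trillion parameters is four factors of ten, so it takes about four years of compounding.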

GPU systems have kept pace by ganging up on the challenge. They scale up to supercomputers, thanks to their fast NVLink interconnects and NVIDIA Quantum InfiniBand networks.

For example, the DGX GH200, a large-memory AI supercomputer, combines up to 256 NVIDIA GH200 Grace Hopper Superchips into a single data-center-sized GPU with 144 terabytes of shared memory.

Each GH200 superchip is a single server with 72 Arm Neoverse CPU cores and four petaflops of AI performance. A new four-way Grace Hopper systems configuration puts in a single compute node a whopping 288 Arm cores and 16 petaflops of AI performance with up to 2.3 terabytes of high-speed memory.
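The scaling arithmetic behind those numbers is simple multiplication, sketched below. The per-chip figures come from the text; the `Superchip` class and `node_totals` helper are hypothetical names used only for this illustration, and the per-chip memory share is a naive division of the quoted totals rather than an official specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    # Per-superchip figures as quoted in the article.
    arm_cores: int = 72
    ai_petaflops: int = 4


def node_totals(chip, count):
    # Aggregate resources when `count` superchips share one node.
    return {
        "arm_cores": chip.arm_cores * count,
        "ai_petaflops": chip.ai_petaflops * count,
    }


four_way = node_totals(Superchip(), 4)

# Rough share of the DGX GH200's 144 TB across 256 superchips,
# assuming the memory is divided evenly (an assumption of this sketch).
shared_memory_per_chip_tb = 144 / 256

print(four_way, shared_memory_per_chip_tb)
```

Four superchips give the 288 cores and 16 petaflops cited for the four-way node, and an even split of the DGX GH200's shared memory works out to a little over half a terabyte per superchip.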

And NVIDIA H200 Tensor Core GPUs announced in November pack up to 288 gigabytes of the latest HBM3e memory technology.

Software Covers the Waterfront

An expanding ocean of GPU software has evolved since 2007 to enable every facet of AI, from deep-tech features to high-level applications.

The NVIDIA AI platform includes hundreds of software libraries and apps. The CUDA programming language and the cuDNN-X library for deep learning provide a base on top of which developers have created software like NVIDIA NeMo, a framework to let users build, customize and run inference on their own generative AI models.

Many of these elements are available as open-source software, the grab-and-go staple of software developers. More than 100 of them are packaged into the NVIDIA AI Enterprise platform for companies that require full security and support. Increasingly, they’re also available from major cloud service providers as APIs and services on NVIDIA DGX Cloud.

SteerLM, one of the latest AI software updates for NVIDIA GPUs, lets users fine-tune models during inference.

A 70x Speedup in 2008

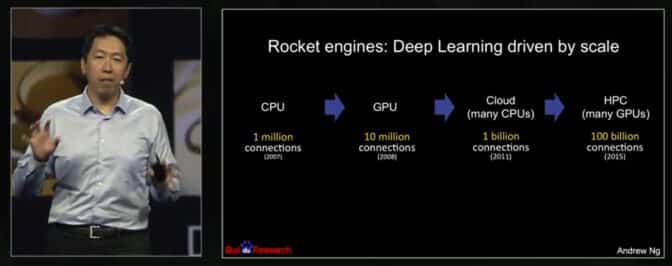

Success stories date back to a 2008 paper from AI pioneer Andrew Ng, then a Stanford researcher. Using two NVIDIA GeForce GTX 280 GPUs, his three-person team achieved a 70x speedup over CPUs processing an AI model with 100 million parameters, finishing work that used to require several weeks in a single day.

“Modern graphics processors far surpass the computational capabilities of multicore CPUs, and have the potential to revolutionize the applicability of deep unsupervised learning methods,” they reported.

In a 2015 talk at NVIDIA GTC, Ng described how he continued using more GPUs to scale up his work, running larger models at Google Brain and Baidu. Later, he helped found Coursera, an online education platform where he taught hundreds of thousands of AI students.

Ng counts Geoff Hinton, one of the godfathers of modern AI, among the people he influenced. “I remember going to Geoff Hinton saying check out CUDA, I think it can help build bigger neural networks,” he said in the GTC talk.

The University of Toronto professor spread the word. “In 2009, I remember giving a talk at NIPS [now NeurIPS], where I told about 1,000 researchers they should all buy GPUs because GPUs are going to be the future of machine learning,” Hinton said in a press report.

Fast Forward With GPUs

AI’s gains are expected to ripple across the global economy.

A McKinsey report in June estimated that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases it analyzed in industries like banking, healthcare and retail. So, it’s no surprise Stanford’s 2023 AI report said that a majority of business leaders expect to increase their investments in AI.

Today, more than 40,000 companies use NVIDIA GPUs for AI and accelerated computing, attracting a global community of 4 million developers. Together they’re advancing science, healthcare, finance and virtually every industry.

Among the latest achievements, NVIDIA described a whopping 700,000x speedup using AI to ease climate change by keeping carbon dioxide out of the atmosphere. It’s one of many ways NVIDIA is applying the performance of GPUs to AI and beyond.

Learn how GPUs put AI into production.