Intel is ramping up to the release of its next-gen Xeon “Emerald Rapids” CPU family, with new data teasing the flagship 64-core, 128-thread Xeon 8592+ processor.

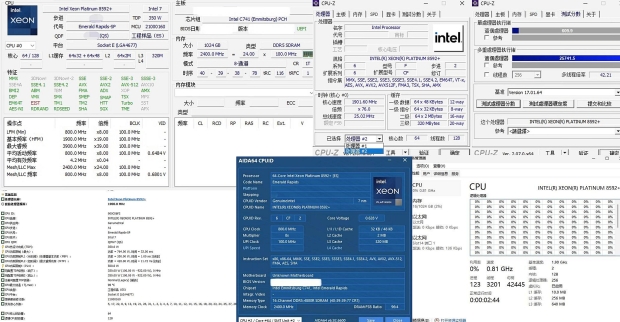

The new Intel Xeon 8592+ processor was spotted in engineering sample (ES) form, with a CPU base clock of 1.9GHz and a boost clock of 3.9GHz. Since this is an ES processor, we should expect higher clocks when the processor reaches retail form. We have a base TDP of 350W that can be adjusted to 420W, while peak power will reach 500W. The pre-release sample seems to have a 922W peak power, but I hope we don't see that number in retail form.

Intel’s new Emerald Rapids-based Xeon 8592+ processor will have 448MB of combined cache (128MB of L2 cache + 320MB of L3 cache), which is nearly double the 232MB of cache on the previous-gen flagship Xeon 8490H “Sapphire Rapids” CPU.
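As a quick sanity check on those cache figures, here is a minimal Python sketch. It assumes 2MB of L2 per core across 64 cores, which is what the 448MB total implies when paired with 320MB of L3; the constant and function names are purely illustrative.

```python
# Cache figures in MB, taken from the leak coverage above.
CORES = 64
L2_PER_CORE_MB = 2       # assumption: not stated in the leak, implied by the 448MB total
L3_MB = 320
PREV_GEN_TOTAL_MB = 232  # combined cache quoted for the Xeon 8490H


def combined_cache_mb(cores: int, l2_per_core_mb: int, l3_mb: int) -> int:
    """Return total L2 + L3 cache in MB."""
    return cores * l2_per_core_mb + l3_mb


total = combined_cache_mb(CORES, L2_PER_CORE_MB, L3_MB)
ratio = total / PREV_GEN_TOTAL_MB

print(f"Combined cache: {total}MB ({ratio:.2f}x the previous flagship)")
```

Under that assumption the total comes to 448MB, roughly 1.93x the 232MB quoted for the Xeon 8490H, which matches the "nearly double" framing.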

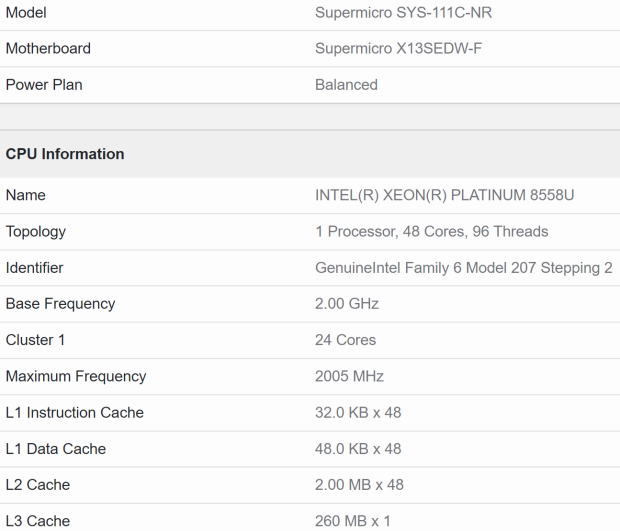

The leak of the new 64-core, 128-thread Xeon 8592+ CPU was joined by the Xeon 8558U, which hit Geekbench recently as a new 48-core, 96-thread Xeon “Emerald Rapids” processor. We can expect a base clock of 2.0GHz with 356MB of combined cache, more than 150MB above its Sapphire Rapids counterpart.

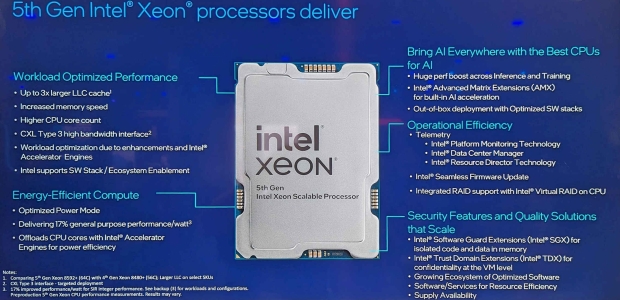

As for the benefits of the new 5th Generation Xeon Scalable platform, we’re to expect up to 3x the last-level cache (LLC), support for faster DDR5 memory, CXL Type 3 support, and a double-digit boost in performance per watt. Intel will also offer a larger core count with its new Emerald Rapids processors versus the previous limits of Sapphire Rapids.

Intel is promising energy-efficient compute performance, with an optimized performance mode that delivers up to a 17% gain in general-purpose performance per watt, and with Intel Accelerator Engines offloading work from the CPU cores for better power efficiency.

The world of AI is, of course, front and center, with Intel pushing “AI Everywhere” with the “Best CPUs for AI”. We’re to expect a “big” performance boost across inference and training AI workloads, Intel Advanced Matrix Extensions (AMX) for built-in AI acceleration, and out-of-the-box deployment with optimized software stacks. Intel has AI covered with Emerald Rapids.

Sounds good, right?

Well, the elephant in the room has to be the fact that AMD has its just-announced Ryzen Threadripper and Ryzen Threadripper PRO 7000 series CPUs… as well as the core-busting EPYC processors. AMD has more cores, more threads, higher clocks, and it’s gaining momentum in the server, data center, and AI worlds.

AMD has its flagship Ryzen Threadripper PRO 7995WX processor with 96 cores and 192 threads of Zen 4-powered CPU performance available for HEDT platforms… on your desk. The server, data center, and AI side of things can scale even higher, with AMD offering its EPYC family of processors led by the AMD EPYC “Bergamo” CPU that has 128 cores and 256 threads of CPU power. Intel is beaten before it has even launched its new Xeon “Emerald Rapids” processors.