TensorRT-LLM offers up to 8x higher performance for AI inferencing on NVIDIA hardware.

As companies like d-Matrix squeeze into the lucrative artificial intelligence market with coveted inferencing infrastructure, AI leader NVIDIA today announced TensorRT-LLM software, a library of LLM inference technology designed to speed up AI inference processing.

What’s TensorRT-LLM?

TensorRT-LLM is an open-source library that runs on NVIDIA Tensor Core GPUs. It’s designed to give developers a space to experiment with building new large language models, the bedrock of generative AI like ChatGPT.

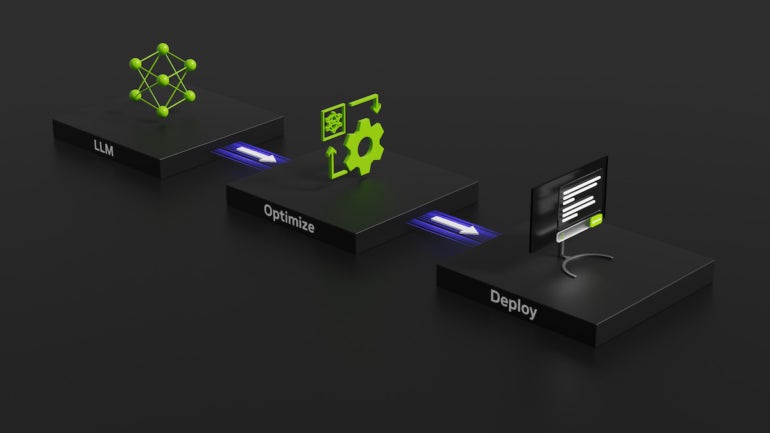

In particular, TensorRT-LLM covers inference (the stage in which a trained model applies what it learned during training to connect concepts and make predictions) as well as defining, optimizing and executing LLMs. TensorRT-LLM aims to speed up inference on NVIDIA GPUs, NVIDIA said.

TensorRT-LLM will be used to build versions of today’s heavyweight LLMs like Meta Llama 2, OpenAI GPT-2 and GPT-3, Falcon, Mosaic MPT, BLOOM and others.

To do this, TensorRT-LLM includes the TensorRT deep learning compiler, optimized kernels, pre- and post-processing, multi-GPU and multi-node communication and an open-source Python application programming interface.

NVIDIA notes that part of the appeal is that developers don’t need deep knowledge of C++ or NVIDIA CUDA to work with TensorRT-LLM.

“TensorRT-LLM is easy to use; feature-packed with streaming of tokens, in-flight batching, paged-attention, quantization and more; and is efficient,” Naveen Rao, vice president of engineering at Databricks, told NVIDIA in the press release. “It delivers state-of-the-art performance for LLM serving using NVIDIA GPUs and allows us to pass on the cost savings to our customers.”

Databricks was among the companies given an early look at TensorRT-LLM.

Early access to TensorRT-LLM is available now for those who have signed up for the NVIDIA Developer Program. NVIDIA says it will be available for wider release “in the coming weeks,” according to the initial press release.

How TensorRT-LLM improves performance on NVIDIA GPUs

LLMs performing article summarization do so faster on TensorRT-LLM and an NVIDIA H100 GPU than on a previous-generation NVIDIA A100 chip without the LLM library, NVIDIA said. For GPT-J 6B inference, the H100 alone delivered a 4x performance improvement over the A100; adding the TensorRT-LLM software raised that improvement to 8x.
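Taking NVIDIA’s two figures at face value, and assuming both are measured against the same A100 baseline, the software’s own contribution on the H100 works out as:

$$
\frac{\text{H100 + TensorRT-LLM vs. A100}}{\text{H100 alone vs. A100}} = \frac{8}{4} = 2
$$

In other words, on this GPT-J 6B summarization benchmark, TensorRT-LLM roughly doubled the H100’s performance compared with running on the H100 without the library.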

Inference can be done quickly in part because TensorRT-LLM uses a technique that splits individual weight matrices across devices. (Weights are the learned parameters that determine how strongly the model’s artificial neurons influence one another.) Called tensor parallelism, the technique means inference can be performed in parallel across multiple GPUs and across multiple servers at the same time.
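To make the idea concrete, here is a minimal Python sketch of one common form of tensor parallelism: splitting a single layer’s weight matrix by columns so each device computes part of the output. It only simulates devices with plain loops, the function names are hypothetical, and it is not a description of how TensorRT-LLM implements the technique internally.

```python
# A minimal sketch of column-wise tensor parallelism for one linear layer.
# "Devices" are simulated with plain Python loops; no GPU or library is used.
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix multiply: (m x k) @ (k x n) -> (m x n)."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)] for row in a]


def split_columns(w: Matrix, num_devices: int) -> List[Matrix]:
    """Split a weight matrix into num_devices shards of contiguous columns."""
    n = len(w[0])
    assert n % num_devices == 0, "columns must divide evenly across devices"
    width = n // num_devices
    return [[row[d * width:(d + 1) * width] for row in w] for d in range(num_devices)]


def tensor_parallel_matmul(x: Matrix, w: Matrix, num_devices: int) -> Matrix:
    """Each simulated device multiplies x by its own column shard of w.
    The partial outputs are then concatenated, which a real multi-GPU
    system would do with an all-gather communication step."""
    shards = split_columns(w, num_devices)
    partial_outputs = [matmul(x, shard) for shard in shards]  # one per device
    # Stitch each output row back together in device order.
    return [sum((part[r] for part in partial_outputs), []) for r in range(len(x))]


x = [[1.0, 2.0]]
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0]]
print(tensor_parallel_matmul(x, w, num_devices=2))  # [[1.0, 2.0, 4.0, 7.0]]
print(matmul(x, w))                                 # same result on one device
```

Because each device holds only its slice of the weights, a model too large for one GPU’s memory can still be served, at the cost of communication between devices to combine the partial results.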

In-flight batching improves the efficiency of inference, NVIDIA said. Put simply, each request’s generated text can be returned as soon as it is complete, instead of waiting for every request in the batch to finish, and new requests can take over the freed-up capacity right away. In-flight batching and other optimizations are designed to improve GPU utilization and cut down on the total cost of ownership.
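The difference can be illustrated with a toy scheduler simulation in Python. It assumes, purely for the sake of the simulation, that each request’s output length (in decoding steps) is known up front; the function names are hypothetical, and the step counts are illustrative, not performance figures for TensorRT-LLM.

```python
# A toy comparison of static batching and in-flight batching.
# Each request needs some number of decoding steps; a batch has a fixed
# number of slots that the GPU processes together on every step.
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Requests are grouped into fixed batches; a batch runs until its
    longest request finishes, and only then does the next batch start."""
    total = 0
    for start in range(0, len(lengths), batch_size):
        total += max(lengths[start:start + batch_size])
    return total


def inflight_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Finished requests leave the batch immediately, and waiting requests
    take over their slots on the next step."""
    waiting = deque(lengths)
    active: List[int] = []  # remaining decoding steps for each running request
    steps = 0
    while waiting or active:
        # Fill any free slots with waiting requests.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        # Run one decoding step for every active request.
        active = [remaining - 1 for remaining in active]
        steps += 1
        # Evict requests that just finished.
        active = [remaining for remaining in active if remaining > 0]
    return steps


requests = [10, 2, 2, 2]  # one long request and three short ones
print(static_batching_steps(requests, batch_size=2))    # 12
print(inflight_batching_steps(requests, batch_size=2))  # 10
```

With static batching, the short requests sharing a batch with the long one sit idle until it finishes; with in-flight batching, their slots are reused immediately, so the same work completes in fewer GPU steps.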

NVIDIA’s plan to reduce total cost of AI ownership

LLM use is expensive. In fact, LLMs change the way data centers and AI training fit into a company’s balance sheet, NVIDIA suggested. The idea behind TensorRT-LLM is that companies will be able to build complex generative AI without the total cost of ownership skyrocketing.